AI Startup

"420,000 AI Models: FriendliAI's Ecosystem Vision"

Dong-A Ilbo

Updated 2025.08.18

“Currently, 10% to 20% of GPU usage goes to training, and the rest goes to inference. Training is the process of building an AI model with computing resources; inference is the process of sending queries to the trained model and receiving responses. FriendliAI has the technology to handle inference efficiently and is arguably the company that has gone deepest into this area worldwide.”

As of August 2025, 1,933,683 AI models are registered on Hugging Face. Open-source AI models and datasets from around the world are shared through the platform. To deploy a model, users must choose an inference infrastructure provider, and the resulting service efficiency and fees depend on that choice. Among these providers, FriendliAI is the most notable.

In January, the startup FriendliAI formed a strategic partnership with Hugging Face and was added as an official deployment option. In March, it expanded its scope by adding support for multimodal AI. FriendliAI currently supports about 418,000 of the roughly 1.9 million AI models, a sign of the company's growing importance and recognition. We spoke with Jeon Byeong-gon, CEO of FriendliAI, about the company's main goals and direction, as well as its identity as a startup.

“The core of the service is its proprietary optimization technology, Continuous Batching”

Jeon Byeong-gon, CEO of FriendliAI, has been a professor in the Department of Computer Science at Seoul National University since 2013 and founded FriendliAI in January 2021 as a faculty startup. He graduated from the Department of Electronic Engineering at Seoul National University and earned his master's and doctoral degrees in computer science from Stanford University and UC Berkeley, respectively. He returned to Korea after holding key AI-related positions at Intel, Yahoo Research, and Microsoft. Jeon stated, “Since 2013, I have been doing research on AI software, and I have been drawn to challenging research topics that can be put to practical use outside the lab. FriendliAI is a good example of commercializing research results.”

Regarding the motivation for founding the company, he said, “What can be done in academia and what can be done in a company are clearly different. While the impact of researching and publishing papers in academia is good, I wanted to create products beyond papers to have a greater impact on the world. FriendliAI's mission today is to make accelerated inference accessible to everyone, and through entrepreneurship, we are consistently achieving that goal.”

FriendliAI's pioneering core technology in the inference field is ‘Continuous Batching,’ which keeps processing going without interruption as requests arrive, rather than waiting for a whole batch to finish. Jeon stated, “Among the papers we have published, Continuous Batching is the technology at the core of AI inference. It can be called the starting point of the LLM inference industry, and it matters enough that the industry can be divided into before and after its arrival. vLLM, the open-source LLM inference engine, was also born thanks to Continuous Batching. It has become a standard in the LLM field.”
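To make the scheduling idea concrete, here is a minimal sketch in Python. It is a toy model, not FriendliAI's runtime. It assumes each request needs a known number of decoding steps and that the GPU can run a fixed number of requests per step. The function names and the example request lengths are illustrative assumptions.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Toy model: a batch holds its slots until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Toy model: after every decoding step, finished requests leave
    the batch and queued requests take the freed slots."""
    queue = deque(lengths)
    running = []  # remaining decoding steps for each active request
    steps = 0
    while queue or running:
        # Fill any free slots before the next step.
        while queue and len(running) < batch_size:
            running.append(queue.popleft())
        # One decoding step produces one token per active request.
        running = [r - 1 for r in running]
        running = [r for r in running if r > 0]
        steps += 1
    return steps


# Hypothetical workload: tokens to generate per request, two slots available.
lengths = [2, 8, 3, 8, 2, 3]
print(static_batching_steps(lengths, 2), continuous_batching_steps(lengths, 2))
# prints: 19 13
```

In this toy workload the short requests no longer wait for the long ones sharing their batch, so the same work finishes in fewer steps. Real systems also have to manage memory for each request's cached state, which this sketch ignores.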

He continued, “FriendliAI's competitiveness is based on proprietary technologies such as its own kernel library optimized for code executed on GPUs, efficient handling of dynamically changing tensors, and unique optimization techniques related to quantization and multimodal caching. Competitors like Fireworks tend to modify and use vLLM, but FriendliAI uses its own runtime, which fundamentally gives it an advantage. Although we are a market-leading company now, we are continuously conducting research and development and advancing to maintain this momentum.”

Efforts by FriendliAI to Secure the Technology Ecosystem

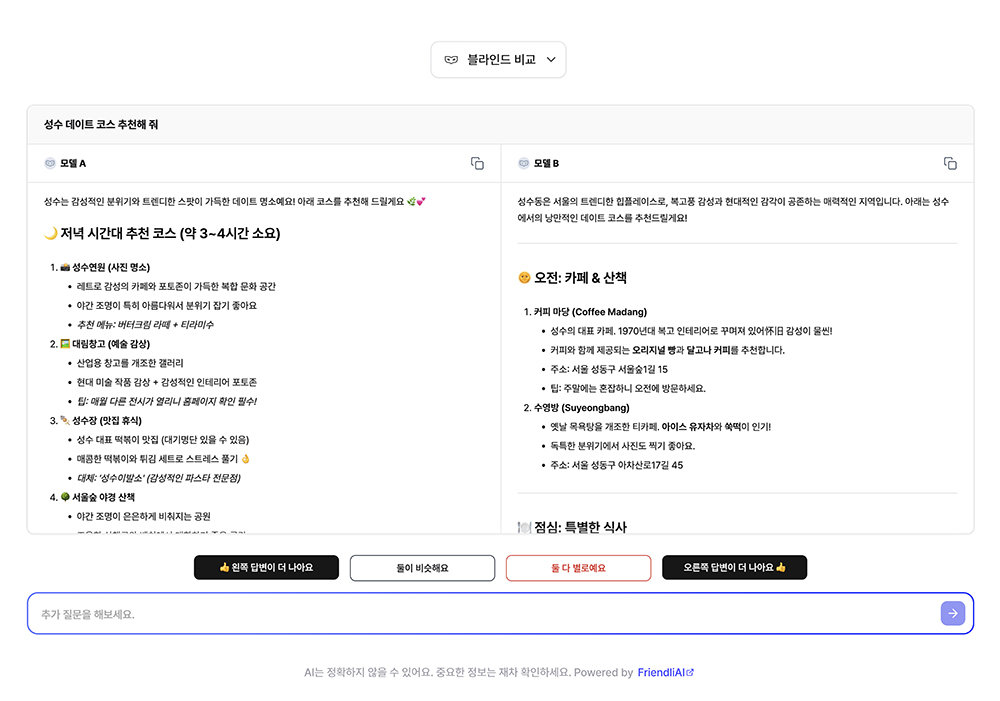

FriendliAI has also started new projects this year to contribute to the AI ecosystem. A representative example is the AI evaluation platform ‘World Best AI (WABA),’ unveiled on the 6th. WABA blind-tests various open-source language models, so anyone can access it and directly evaluate the performance of large language models. Users compare the responses of two AIs to the same question and choose which one is better or whether they are about equal. After the choice is made, the identity of each model is revealed.

Currently, most AI performance is evaluated only with benchmarks that solve predetermined problems. Even an AI with high benchmark scores may feel less capable in actual use. Blind evaluation with WABA is an intuitive way to see how a specific AI model's usability compares with others in real usage environments.
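The article does not say how WABA turns individual votes into a ranking. As one plausible illustration, the sketch below tallies blind pairwise votes into a per-model win rate, counting a tie as half a win for each side. The function name, the vote format, and the scoring rule are all assumptions made for this example.

```python
from collections import defaultdict


def win_rates(votes):
    """Aggregate blind pairwise votes into a win rate per model.

    votes: iterable of (model_a, model_b, outcome), where outcome is
    "a", "b", or "tie". A tie counts as half a win for each model
    (an assumed rule, not WABA's documented one).
    """
    score = defaultdict(float)
    games = defaultdict(int)
    for model_a, model_b, outcome in votes:
        if outcome not in ("a", "b", "tie"):
            raise ValueError(f"unknown outcome: {outcome!r}")
        games[model_a] += 1
        games[model_b] += 1
        if outcome == "a":
            score[model_a] += 1.0
        elif outcome == "b":
            score[model_b] += 1.0
        else:
            score[model_a] += 0.5
            score[model_b] += 0.5
    return {model: score[model] / games[model] for model in games}


# Hypothetical votes; model names are placeholders.
votes = [("m1", "m2", "a"), ("m1", "m3", "tie"), ("m2", "m3", "b")]
print(win_rates(votes))
```

A simple win rate ignores how strong each opponent was; rating schemes that account for opponent strength are a common alternative when the number of comparisons per pair is uneven.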

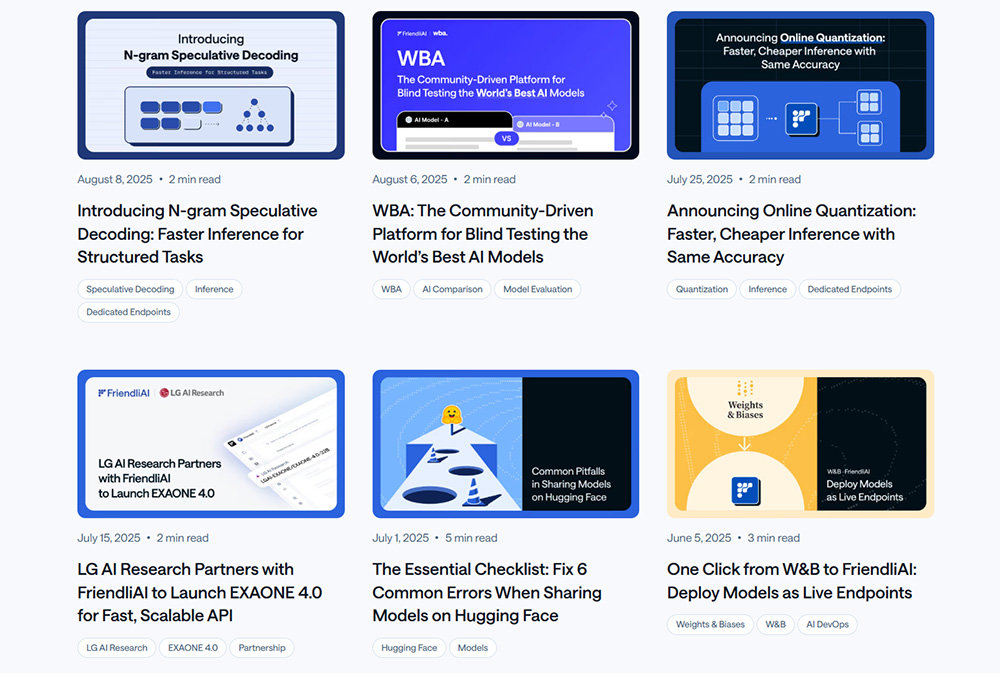

Technological innovation is also ongoing. At the end of July, the online quantization feature became available in the service, and last week the N-gram Speculative Decoding feature launched as well. Quantization is a technique that reduces the numerical precision of an AI model to improve memory and compute efficiency. Generally, a model must be quantized in advance and then uploaded to be served quickly, but FriendliAI's technology quantizes rapidly at the endpoint while maintaining model quality. N-gram Speculative Decoding increases inference speed without changing the model by using ‘N-gram’ patterns, that is, fixed-length sequences of text.
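The two techniques can be illustrated with small, self-contained Python sketches. These are generic textbook-style versions, not FriendliAI's implementation. The quantizer assumes simple symmetric 8-bit rounding. The drafter assumes the common approach of proposing tokens that followed an earlier occurrence of the most recent n-gram, with the model then confirming or rejecting them. All function names and parameters are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in quantized]


def ngram_draft(tokens, n=2, max_draft=3):
    """Propose draft tokens by finding the most recent earlier occurrence
    of the last n tokens and copying what followed it."""
    if len(tokens) < n:
        return []
    key = tokens[-n:]
    for start in range(len(tokens) - n - 1, -1, -1):
        if tokens[start:start + n] == key:
            return tokens[start + n:start + n + max_draft]
    return []


def accepted_prefix(draft, verified):
    """Keep draft tokens only up to the first disagreement with the
    tokens the model itself would produce at those positions."""
    accepted = []
    for drafted, actual in zip(draft, verified):
        if drafted != actual:
            break
        accepted.append(drafted)
    return accepted


q, scale = quantize_int8([0.5, -1.27, 0.0])
print(q)  # [50, -127, 0]

context = ["the", "cat", "sat", "on", "the", "cat"]
draft = ngram_draft(context)
print(draft)  # ['sat', 'on', 'the']
print(accepted_prefix(draft, ["sat", "on", "a"]))  # ['sat', 'on']
```

Because every accepted token is one the model would have produced anyway, the output is unchanged; the speedup comes from checking several drafted tokens in one pass instead of generating them one at a time.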

In this way, FriendliAI consistently introduces new technologies to the market in line with the rapidly evolving AI market, which is a key factor in maintaining its advantage over other competitors.

Leading AI Companies as Clients, but Continuously Focusing on Marketing and R&D

Despite being courted by many companies, including Hugging Face, Jeon Byeong-gon remains grounded. He stated, “When I first started the business, I went to Silicon Valley empty-handed and struggled. What I realized then was the importance of growing together within the AI ecosystem and playing a significant role in it. After weighing the most effective path for AI inference, I partnered with Hugging Face. By entering the ecosystem and emerging as a key company, we raised our recognition to where it is now.”

He continued, “We are continuously hiring for overseas sales and to strengthen our business model, and we market consistently. Inbound marketing comes mainly through Hugging Face, Google Ads, and LinkedIn. On the outbound side, we now receive many invitations to Silicon Valley conferences. In June, I presented at W&B's Fully Connected and Arize AI's Observe 2025. We take part in high-impact activities such as hackathons and community building.”

In Korea, many AI companies partner with FriendliAI. Jeon stated, “Major clients include SK Telecom, KT, Upstage, LG AI Research, Twelve Labs, and Scatter Lab. For example, when Upstage provides Solar LLM inference to its clients, we support that process. LG AI Research's recently announced Exaone 4.0 is also served through our platform at one-tenth the cost of GPT.” He added, “One-tenth is possible because the model itself is lightweight and because we use GPUs faster and more efficiently than competitors.”

Focusing on a Single Goal and Creating an Honest and Collaborative Corporate Culture

The topic shifted to the organizational culture of FriendliAI as a startup rather than a tech company. Jeon explained, “A startup is an environment with many changes. Even if a goal is set, if new signals are detected in the market, the path must be adjusted or new goals set. Also, to quickly learn new things in a changing environment, a spirit of inquiry into technology is essential.”

He added, “In a startup, while the tasks assigned to each person are important, it is also necessary to define and find tasks that are not assigned and carry them out. Team collaboration, which values the results created together beyond individual achievements, is also important. Internally, it would be good to have a system in place, but given the fast-paced market situation, it is necessary to adapt well and define and perform tasks with the right mindset.”

Regarding the current organizational structure, he explained, “There were already many experienced developers, and recently, experienced individuals related to AI model performance optimization have joined. There are also infrastructure engineers ensuring the service runs stably 24/7, and members in system design and user experience. Developers prefer intuitive, easy-to-use solutions, so we emphasize these aspects. We are also currently recruiting in the U.S.”

Jeon stated, “FriendliAI has a culture that helps each member focus on their own goals and believes in the value of self-direction. Being honest with each other and working through both what can be done and what is difficult together is considered a virtue. Our goal is to communicate and collaborate so that we succeed together.”

Planning to Launch ‘Friendli Agent’ in Q4 and Considering Investment Rounds

FriendliAI's next goal is the AI agent, and the direction after that is already outlined. Jeon stated, “Currently, revenue is stable, and we need to prepare to expand the scale of the business. The plan is to promote Friendli Suite widely in the market, and we also plan to launch our AI agent in the fourth quarter of this year. This model will provide inference functionality at the agent layer. We also plan to support new types of GPUs and are preparing many product aspects for launch. We aim to achieve sales targets in the North American market and plan to enter the next investment round next year.”

Finally, Jeon described FriendliAI as a company ‘in the eye of the storm.’ He stated, “FriendliAI is one of the companies at the center of the global whirlwind of artificial intelligence. I believe it is the company most closely connected to the center in Korea. The eye of the storm is calm at its center, but if it remains still, it will be swept away. To avoid this, I will lead FriendliAI with the mindset of following the direction the storm is moving and surviving to become the company needed to piece together all the puzzles in the AI market.”

Nam Si-hyun, IT Donga reporter (sh@itdonga.com)

Jeon Byeong-gon, CEO of FriendliAI / Source=IT Donga

Comparison of processing AI computations with Continuous Batching (dark blue) and general processing (white) / Source=FriendliAI

FriendliAI recently launched the ‘WABA’ service, a blind test-based AI evaluation platform / Source=FriendliAI

FriendliAI continues to update new features in line with the rapid changes in the AI market / Source=FriendliAI

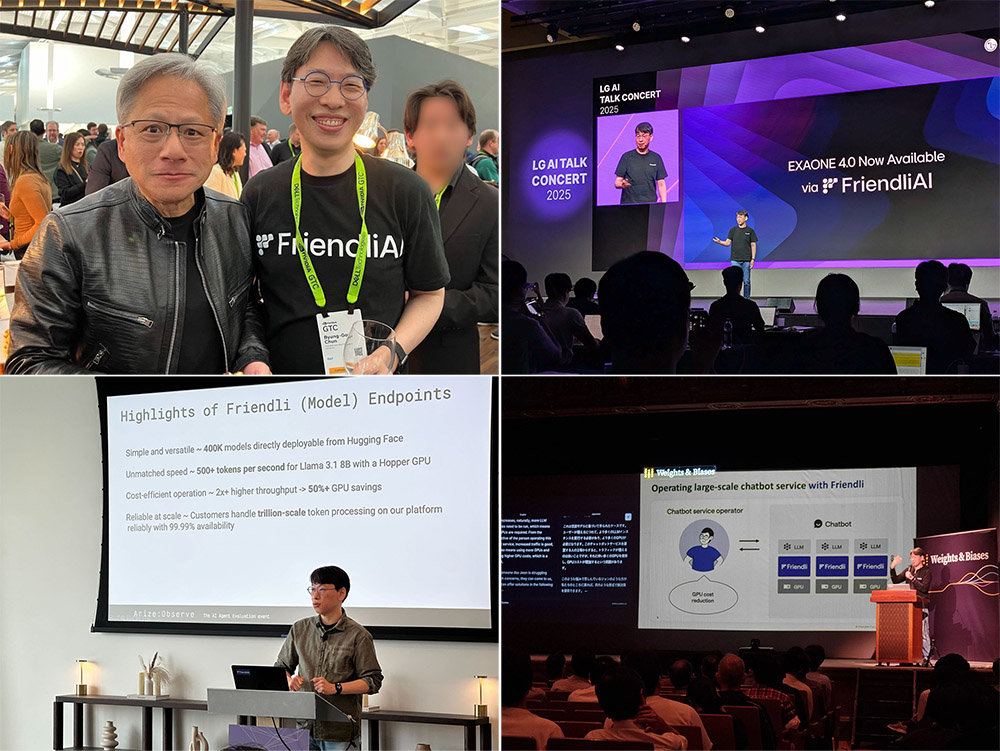

Jeon Byeong-gon consistently presents FriendliAI's technology both domestically and internationally, contributing to the ecosystem's development / Source=Jeon Byeong-gon's LinkedIn

FriendliAI is creating a proactive work culture while keeping pace with the rapid changes in the AI market / Source=IT Donga

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.