Lablup CEO: Pioneering a Modular, Quantifiable AI Era

Dong-A Ilbo | Updated 2025.09.25

Lablup, an AI development platform company, held its technology conference 'lab | up 〉 /conf/5' on the 24th at the aT Center in Yangjae, Seocho-gu, Seoul. Under this year's theme, 'Make AI Composable,' Lablup's major partners shared case studies on the composability and scalability of current-generation artificial intelligence (AI) technology. Lablup has drawn attention in the infrastructure and AI industries for its technology for maximizing GPU efficiency and for its AI infrastructure management platform, 'Backend.AI.' In August of this year, it also became more widely known to the public by joining the 'Independent AI Foundation Model' project as part of the Upstage consortium.

Shin Jeong-kyu, CEO of Lablup, opened his presentation by saying, “In recent years, the AI field has undergone a transformation akin to an industrial revolution. Today, all kinds of players are active in the AI industry, from small companies working with small AI models to those taking on global pipelines or artificial general intelligence. Lablup is building its services to support every scale, from the AI edge to hyperscale.”

The ultimate goal is to quantitatively measure intelligence demand

The keynote was an opportunity to look back on Lablup's past 10 years and share a blueprint for the next 10. CEO Shin said, “For the past 50 years, humans have been using computers to replace knowledge work, and today we have reached a stage where we search for and research materials with computers instead of in libraries. Since 2022, deep learning-based generative AI models have been gaining attention, and since GPT-3 there have been attempts to replace human creative activities.” He added that humanity has always devised ways to measure, evaluate, and quantify new technologies as they emerge.

For instance, the physical power exerted by a horse is called '1 horsepower.' Although individual horses vary in power, the unit has been standardized and is used in industry worldwide. As a result, the power output of a car or an engine can be expressed in horsepower, as tens or hundreds of times that unit. CEO Shin believes that if the results of AI processing could be measured with quantifiable indicators such as 'intelligence demand' or 'intelligence consumption,' this would not only indicate performance objectively but also help improve AI performance step by step.

The reason for setting such a goal lies in Lablup's position: the company sits at the intersection of hardware and software. CEO Shin said, “Lablup is quicker than any other company at identifying the characteristics of AI semiconductors and measuring their performance quantitatively. We partner with many developers and companies to define how AI is supplied and mass-produced, and we focus on building platforms.”

He continued, “In 2023, we released platforms such as PALI and PALANG, and this year we are supporting the market with 'Continuum,' a fault-tolerant (able to keep operating normally despite errors) automated management system for LLMs and multimodal models, and 'AI:DOL,' an omnimodal generative AI development platform.”

In addition, Lablup's core service, Backend.AI, now at version 25.14, is evolving with hardening features and with solutions such as Backend.AI Doctor for automatic diagnosis and recovery. CEO Shin said, “While it is difficult to foresee the next 10 years, we can clearly see two years ahead. ChatGPT-level capability, which was widespread in 2023, now runs at the smartphone level. Such advances will reach ever smaller infrastructure and settings, while hyperscalers will grow larger. Lablup aims to become a company that develops, supplies, and quantifies intelligence in an era of many scales and platforms.”

Preparing for the era of composable AI: offering solutions with Backend.AI

Kim Jun-ki, Lablup's Chief Technology Officer (CTO), shared Lablup's field experience and latest updates under the theme 'Composable AI, Composable Software.' CTO Kim explained, “Recently, a range of use cases built on composition have emerged, particularly in the AI coding field. This is why Lablup emphasizes the keyword 'composable.' Composable structures will become very important in the computing industry.”

He continued, “From a developer's perspective, composability is akin to conceptualizing programs through abstraction (a programming technique that keeps only the essentials), and when the focus is AI, it is about how to quantify and combine the functions a service requires.”

CTO Kim said that achieving composable AI requires both horizontal expansion and vertical integration. Horizontal expansion is achieved by optimizing jobs made up of hundreds or thousands of containers across dozens to hundreds of nodes, or through advanced scheduling. Vertical integration means having comprehensive access to storage, infrastructure, and server connectivity throughout the program.
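The horizontal expansion described above, spreading many containers across a pool of nodes, can be illustrated with a toy placement routine. This is a minimal sketch under stated assumptions, not Backend.AI's scheduler: the function name `place_containers`, the node names, and the model of one GPU count per container are all hypothetical, and a production scheduler would also weigh memory, network topology, and priorities.

```python
import heapq
from collections import defaultdict


def place_containers(gpu_requests, node_capacity):
    """Greedily assign each container to the node with the most free GPUs.

    gpu_requests: list of GPU counts, one per container (hypothetical model).
    node_capacity: dict mapping node name -> total GPUs on that node.
    Returns (placement, unplaced): placement maps node -> list of container
    indices; unplaced lists containers that did not fit on any node.
    """
    if not node_capacity:
        return {}, list(range(len(gpu_requests)))

    # Max-heap on free GPUs (negated, because heapq is a min-heap).
    heap = [(-free, name) for name, free in node_capacity.items()]
    heapq.heapify(heap)
    placement = defaultdict(list)
    unplaced = []

    # Place the largest requests first so small ones do not crowd them out.
    order = sorted(range(len(gpu_requests)), key=lambda i: -gpu_requests[i])
    for idx in order:
        need = gpu_requests[idx]
        neg_free, name = heapq.heappop(heap)
        free = -neg_free
        if need <= free:
            placement[name].append(idx)
            free -= need
        else:
            # Even the emptiest node cannot host it, so no node can.
            unplaced.append(idx)
        heapq.heappush(heap, (-free, name))
    return dict(placement), unplaced


if __name__ == "__main__":
    # Four containers on two hypothetical 8-GPU nodes.
    print(place_containers([4, 2, 2, 8], {"node-a": 8, "node-b": 8}))
```

Sorting the requests in descending order is a common heuristic for this kind of bin packing; it does not guarantee an optimal packing, only a reasonable one at low cost.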

A good illustration of horizontal expansion and vertical integration is the independent AI foundation project in which Lablup is participating. Lablup is currently supporting the construction of a Korean AI model through the Upstage consortium, providing Backend.AI as the distributed deep learning training platform. If the project continues, it plans to keep cooperating on advancing GPU virtualization technology and on LLM evaluation and verification. Lablup is approaching infrastructure management for the national AI foundation through 'horizontal expansion.'

CTO Kim said, “The model currently being built is a Mixture of Experts (MoE) based LLM with 100 billion parameters. A cluster of 504 NVIDIA B200s is being used for training. During the work, unexpected errors and stoppages occur, and problems arise in storage. To address this, work such as rewriting thousands of schedulers was organized so that the existing process is reused when retrying the same task raises no issues.” This approach, which takes all of the hardware's environmental variables into account and technically consolidates AI infrastructure technology and services, is horizontal expansion.

Vertical integration covers the entire stack, from the hardware and drivers at the bottom to the end-user interface at the top. It involves connecting specialized CPU architectures with GPUs, putting them to work on computation tasks, and managing libraries and drivers comprehensively. Ultimately, horizontal expansion and vertical integration must be brought together to advance toward composable AI.

CTO Kim said, “If AI agents are to communicate with each other and handle larger-scale AI in the future, GPUs must be well managed and optimized. Confidential computing, which has already been commercialized in the cloud industry, should now be applied to the AI industry, and costs should be cut by using disaggregation to optimize distributed LLM serving. Lablup will provide vertical integration through Backend.AI and help operate AI as efficiently as possible.”

Rebellions, HyperExcel, Naver Cloud, ETRI, and more participate

Because Lablup serves as a focal point for AI hardware and software, numerous companies took part in the sessions. Participants included major domestic AI companies such as Nota, Rebellions, Lotte Innovate, Miracom I&C, Modu Research Institute, More, Aim Intelligence, and SK Telecom, as well as cloud service providers such as KT Cloud, Microsoft, Naver Cloud, and NHN Cloud.

Kim Hong-seok, head of software architecture at Rebellions, introduced how Rebellions' Neural Processing Unit (NPU) supports vLLM and the technical support ecosystem around it. vLLM is an open-source library for LLM inference and serving. The Rebellions NPU can use various vLLM features without separate code modification, and through 'Hugging Face,' the open-source AI library platform, it supports a range of Mixture of Experts (MoE) models, which are needed to improve LLM performance and efficiency. The company also plans to provide hardware support in the form of vLLM plugins.

HyperExcel CTO Jinwon Lee presented on the theme 'Making Semiconductors for AI.' There was no specific product news; instead, he mainly discussed what the company focuses on in AI semiconductor development. LLM inference is divided into a prefill stage, which is computation-heavy, and a decode stage, where memory performance matters most. The problem is that current-generation GPUs deliver poor performance per watt when handling both.
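The split between a computation-heavy prefill stage and a memory-sensitive decode stage can be made concrete with a back-of-the-envelope roofline estimate. The sketch below is illustrative only and was not part of the presentation: it assumes a dense model at batch size 1, about 2 FLOPs per parameter per token, 16-bit weights read once per forward pass, and it ignores attention and KV-cache traffic. The accelerator figures are hypothetical and do not describe any real chip.

```python
def arithmetic_intensity(tokens_per_pass, bytes_per_param=2):
    """FLOPs performed per byte of weights read in one forward pass.

    Assumes ~2 FLOPs per parameter per token and that the weights are read
    from memory once per pass, so the parameter count cancels out.
    """
    flops_per_param = 2 * tokens_per_pass
    return flops_per_param / bytes_per_param


def bottleneck(intensity, peak_flops, mem_bandwidth):
    """Classify a workload against a chip's roofline ridge point."""
    ridge = peak_flops / mem_bandwidth  # FLOPs the chip can do per byte moved
    return "compute-bound" if intensity >= ridge else "memory-bound"


# Hypothetical accelerator: 1e15 FLOP/s peak, 4e12 bytes/s memory bandwidth.
PEAK_FLOPS = 1e15
MEM_BANDWIDTH = 4e12

prefill = arithmetic_intensity(tokens_per_pass=2048)  # whole prompt at once
decode = arithmetic_intensity(tokens_per_pass=1)      # one new token per pass

print("prefill:", prefill, bottleneck(prefill, PEAK_FLOPS, MEM_BANDWIDTH))
print("decode:", decode, bottleneck(decode, PEAK_FLOPS, MEM_BANDWIDTH))
```

Under these assumptions, prefill performs thousands of operations for every byte of weights it reads, while decode performs about one, which is why the two stages stress different parts of a chip.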

Moreover, with the emergence of AI agents, conversations are expected to grow longer and interactions will need to be processed in real time more often. This means demand may split between GPUs specialized in computation and AI semiconductors specialized in inference. HyperExcel is designing its products to excel at tasks with frequent memory access, and other AI semiconductor companies will likewise need to stay competitive by building on their own strengths.

From the cloud industry, Justin Yoo, Senior Cloud Advocate at Microsoft, presented on 'Operating ChatGPT in a Closed Network,' and Park Bae-seong, AICS Tech Leader at Naver Cloud, introduced acceleration technologies for running LLMs quickly and cheaply in various scenarios. Jung Min, GPU System Engineer at NHN Cloud, described how NHN Cloud builds its high-performance computing infrastructure and how it builds and operates its systems overall.

Lablup aims to become a company that quantifies and supplies intelligence

CEO Shin said, “When we founded Lablup 10 years ago, some laughed when we said we would contribute to technology that could replace human intellectual and creative activities. Now everyone knows it was no laughing matter. Although the challenge is abstract, our goal for the next 10 years is to become a company that supplies intelligence.”

Quantifying intelligence and knowledge is still a theoretical proposition. Given the recent pace of development in the AI industry, however, it is hard to call it unachievable. Today's AI benchmarking tools analyze performance and computation speed quantitatively but do not capture quality. Lablup's goal is to create technology that can aggregate and express this in some unit or by some method.

Lablup's technology spans hardware and software, and the company has partnerships across the industry. If standards for quantifying AI are to be established, Lablup is in the most advantageous position to do so. It is hoped that Lablup's new goal will set a new standard for evaluating AI and become a foundation for the growth of AI companies as a whole.

IT Donga Reporter Nam Si-hyun (sh@itdonga.com)

Shin Jeong-kyu, CEO of Lablup, announced the opening of Lablup's fifth conference / Source=IT Donga

Lablup launched two new services, Continuum and AI:DOL, through this conference / Source=IT Donga

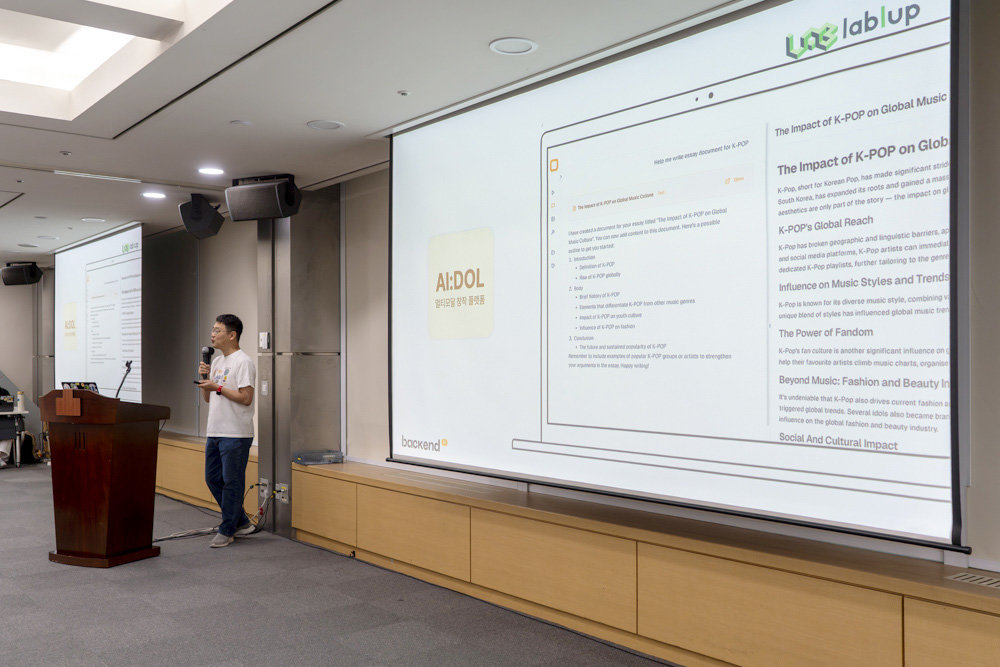

AI:DOL is an omnimodal-based generative AI development platform / Source=IT Donga

Hundreds of developers from Lablup's partner companies and related companies attended the event / Source=IT Donga

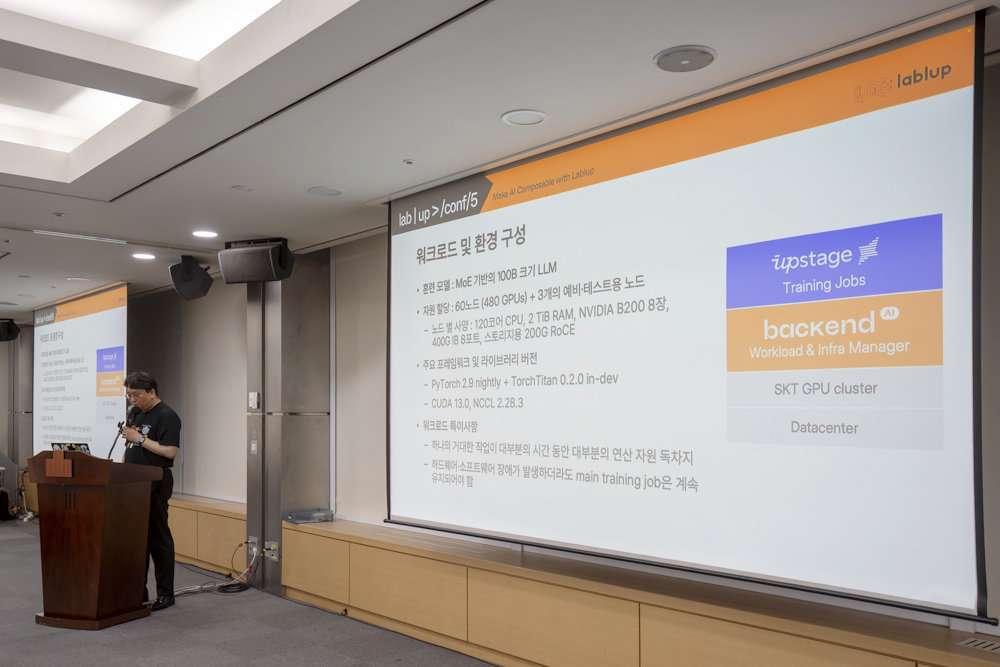

Kim Jun-ki, Lablup's CTO, is presenting on national AI foundation cooperation matters / Source=IT Donga

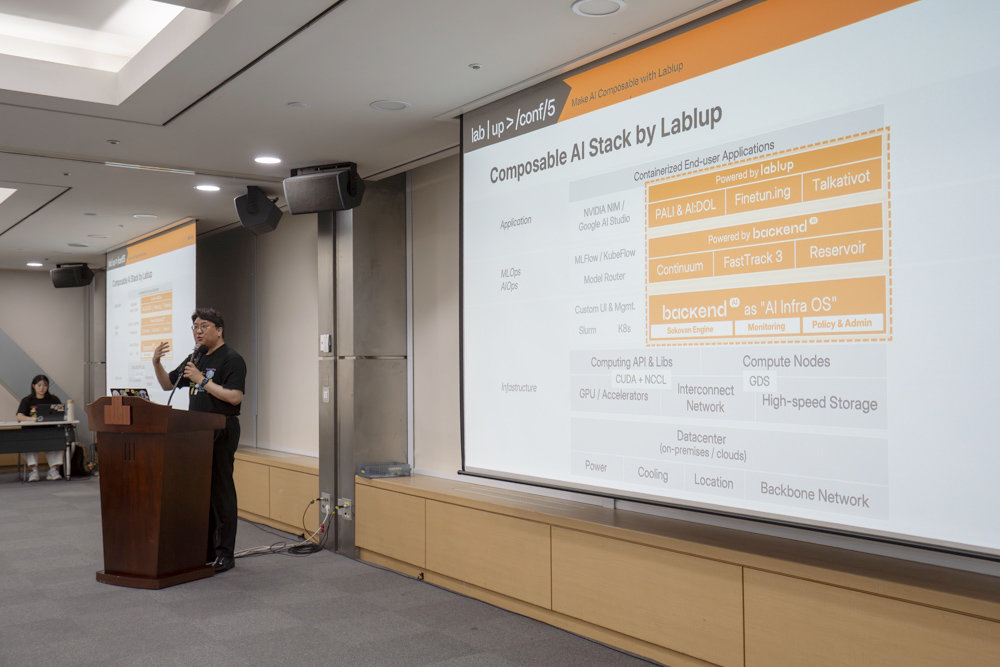

Lablup aims to open the era of 'composable AI' through the two elements of vertical integration and horizontal expansion / Source=IT Donga

Kim Hong-seok, head of software architecture at Rebellions, introduces vLLM-related support details / Source=IT Donga

Jinwon Lee, CTO of HyperExcel, candidly presents on experiences in AI semiconductor development / Source=IT Donga

Lablup's next 10 years aim towards an era where AI can be quantified / Source=IT Donga

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.