Reporters on The Dong-A Ilbo IT Science Team introduce noteworthy technologies, trends, and companies in the fields of IT, science, space, and bio. “What kind of company is this?” Behind-the-scenes stories of tech companies changing the world with technology! From ideas that stunned the world to the current concerns of founders, this series delves into everything readers have been curious about.

Elon Musk, CEO of Tesla, has announced the merger of SpaceX, the space company he founded, and his artificial intelligence (AI) company xAI. Marking the merger of the two firms, Musk declared that he would “build a space AI data center.” SpaceX has already submitted to U.S. authorities a plan to launch 1 million satellites to place space data centers in Earth’s orbit. Musk has even put forward a specific timeline, saying, “Within two to three years, space will be the cheapest place for AI computation.”

After the news broke, Matt Garman, CEO of Amazon Web Services (AWS), which is building large-scale data centers on the ground, poured cold water on the idea, saying, “It is not economical because it costs a tremendous amount of money to send payloads into space,” and “Space data centers are still a long way off.” Scientists are also skeptical, pointing to the technological and physical limits of space data centers. Why, then, is Musk talking about investing astronomical sums to put data centers into space? And is it actually feasible?

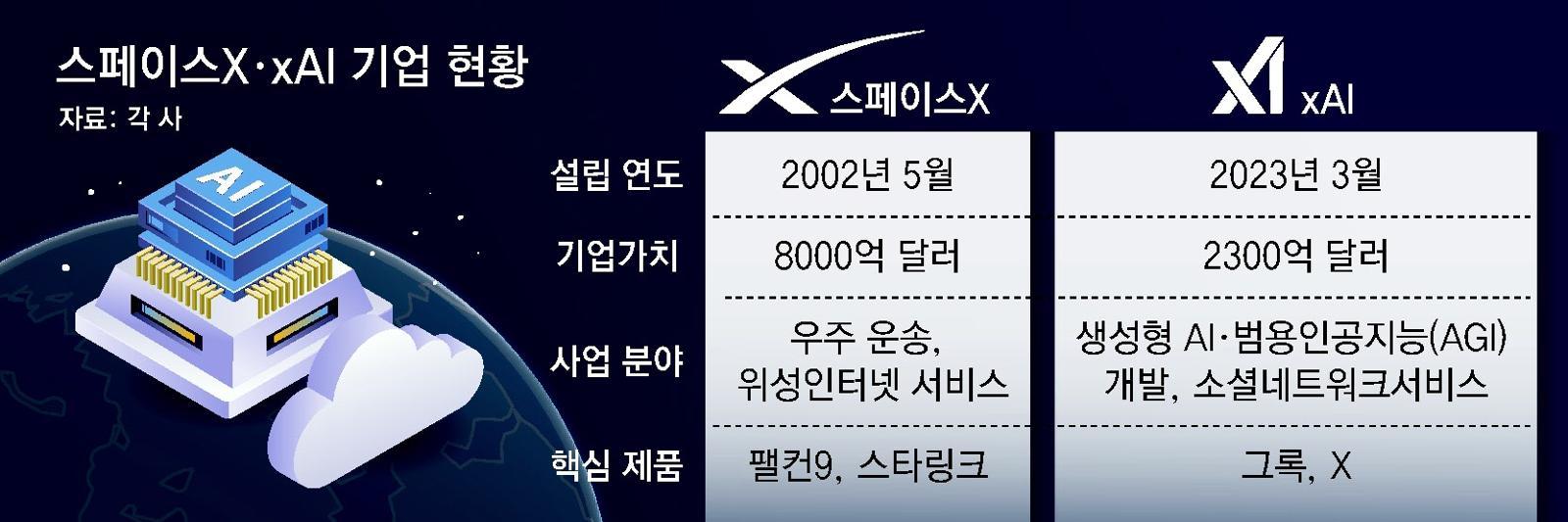

Status of SpaceX and AI companies

Terrestrial data centers are reaching their limits

As countless tech companies worldwide have rushed to develop AI, “data centers” to run AI have emerged as critical infrastructure. For AI to learn knowledge and perform inference to answer users’ questions, it must perform an immense number of calculations at tremendous speed. When a user submits a single question to ChatGPT or Gemini, high-performance graphics processing units (GPUs) in the background consume electricity as they handle complex computations in real time. Such computing loads are difficult for ordinary servers or computers to support, making it necessary to have “AI-dedicated factories” where power sources and cooling functions are concentrated in one place. These factories are AI data centers.

The problem is that AI data centers require so much power that they are straining the power grids humanity has used to date. The total amount of electricity estimated to be consumed in training AI models such as ChatGPT is 50,000–60,000 MWh (megawatt-hours). This is said to be roughly equivalent to the annual power consumption of 20,000 households. As AI models advance and new versions are released, the amount of electricity consumed continues to increase. Around the world, there are hasty efforts to expand power grids to meet the electricity demand of AI data centers, but supply is failing to keep up with demand.
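As a rough sanity check on that comparison, the per-household consumption implied by the two figures can be worked out directly. This is only a minimal sketch: the function name is hypothetical, and the inputs are simply the 50,000–60,000 MWh and 20,000 households quoted above, not independent data.

```python
def implied_household_mwh(total_mwh: float, households: int) -> float:
    """Annual electricity per household implied by a total and a household count."""
    return total_mwh / households


# Figures quoted in the article: 50,000-60,000 MWh spread over 20,000 households.
low = implied_household_mwh(50_000, 20_000)
high = implied_household_mwh(60_000, 20_000)

print(f"Implied use: {low:.1f} to {high:.1f} MWh per household per year")
```

The estimate therefore assumes a household that uses roughly 2.5 to 3 MWh of electricity a year. The number of households it corresponds to would shift with the actual average consumption in a given country.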

There is also the issue of managing the heat generated by GPUs engaged in constant computation, which bears directly on power efficiency. If this heat is not effectively controlled, AI data centers may fail to deliver their intended performance or may even be damaged. Cooling only the air in a data center is no longer sufficient, and scientists are experimenting with immersing data centers in liquids such as seawater or oil for cooling. There are even claims that answering a single question on ChatGPT requires as much water as a small bottle of mineral water. On top of this, operators must secure large tracts of land to build massive data centers, among many other challenges.

An employee inspects an overheated server at a Google data center in The Dalles, Oregon, United States. Captured from Google’s website

Space data centers as an excellent theoretical alternative

The limits of terrestrial data centers are precisely why some are seeking to move data centers into space. Space has an energy source that is virtually infinite: the Sun. Unlike on Earth, where solar power generation becomes less efficient on cloudy days, space has no atmosphere, and a satellite in the appropriate orbit never passes through a night in which the Sun disappears. Once a satellite equipped with a data center reaches such an orbit, it can receive an uninterrupted power supply from the Sun 365 days a year. The downsides typically cited for solar power become irrelevant in outer space.

In space, there is no need to use thousands of tons of water to cool data centers. Space is an ultra-low-temperature environment, close to minus 270 degrees Celsius. SpaceX believes that, if this is effectively utilized, the space environment itself can serve as a massive cooling system. Another advantage is that it becomes possible to sidestep the complex procedures required to build data centers on Earth, such as securing land, obtaining building permits, conducting environmental impact assessments, and obtaining consent from local residents. “Theoretically,” space data centers are an excellent alternative to terrestrial data centers, which are approaching saturation.

Captured from the SpaceX website

Numerous practical constraints must be overcome

In reality, however, space data centers face a host of hurdles. The first issue is “cost,” as mentioned by CEO Garman. Even though SpaceX has rocket reusability technology and has already launched thousands of satellites into Earth’s orbit, launching a massive data center into space would still require astronomical expenditures. If those costs exceed those of constructing, operating, and maintaining data centers on the ground, even Musk would have little incentive to build data centers in space.

The second constraint is, ironically, “cooling.” Space is an ultra-low-temperature environment, at around minus 270 degrees Celsius, but because it is a vacuum, there is no air or water to carry heat away by conduction or convection. Just as a thermos uses a vacuum layer to keep boiling water hot, the vacuum of space could trap the heat generated by data centers. For this reason, some have suggested using “radiative cooling” methods that dissipate thermal energy as infrared radiation. However, this method has so far been applied only on a small scale in places like space stations, and critics say its cooling efficiency is not yet high enough to manage the heat generated by data centers.
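To give a feel for why critics doubt radiative cooling at data center scale, the Stefan-Boltzmann law (radiated power = emissivity × σ × area × temperature⁴) can be used to estimate how much radiator surface a given heat load would need. The sketch below is illustrative only. The 1 MW heat load, the 300 K radiator temperature, and the emissivity of 0.9 are assumptions chosen for the example, not figures from SpaceX or any other company, and the calculation ignores sunlight and Earth's infrared radiation absorbed by the radiator, both of which would increase the required area.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 * K^4)


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9) -> float:
    """Radiating surface area needed to reject heat_w watts at temp_k kelvin.

    Idealized: radiation to deep space only, with no absorbed sunlight
    or Earth infrared taken into account.
    """
    flux_w_per_m2 = emissivity * SIGMA * temp_k ** 4
    return heat_w / flux_w_per_m2


# Assumed example: 1 MW of waste heat, radiator surface held at 300 K.
area = radiator_area_m2(1_000_000, 300.0)
print(f"Radiating area needed: about {area:,.0f} m^2")

# A hotter radiator sheds heat far more effectively (power scales with T^4).
area_hot = radiator_area_m2(1_000_000, 350.0)
print(f"At 350 K: about {area_hot:,.0f} m^2")
```

Under these assumptions, a single megawatt of heat calls for radiating surface on the order of a couple of thousand square meters. Running the radiators hotter shrinks that area sharply, but only if the chips can tolerate the higher operating temperature.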

The final challenge is operational difficulty. On Earth, when a data center breaks down, technicians can be dispatched at any time to repair it. In space, however, repairing a malfunctioning data center is next to impossible. It is also necessary to assess whether it is possible to launch a sufficient number of data centers to perform meaningful AI computation. Musk has said he would launch 1 million satellites, but this figure likely includes some padding. Satellite companies typically apply for permission to launch more satellites than they actually plan to deploy.

In addition, more than 9,000 satellites from SpaceX alone have already been placed in low Earth orbit, and China has also launched hundreds of satellites of its own, prompting concerns that low Earth orbit is rapidly becoming saturated. No matter how vast space is, operators would have to accept the risk that data centers painstakingly launched into space could be rendered useless by collisions with other satellites.

A photo posted on X by Ezra Feilden, Chief Technology Officer (CTO) of Starcloud. “Gemma,” an artificial intelligence (AI) model trained in a space data center operated by Starcloud, sent back a response to Earth that began with the phrase, “Hello, Earthlings!” Captured from CTO Feilden’s X

Space data center experiments already under way

Despite these issues, experiments to commercialize space data centers are already in progress. Starcloud, a U.S. startup backed by Nvidia, succeeded in test-running what it calls the world’s first space data center in December last year. An AI model completed training aboard a refrigerator-sized satellite data center in low Earth orbit equipped with Nvidia’s high-performance GPUs, and it answered a question transmitted from Earth with a response that began with the phrase, “Hello, Earthlings!” Starcloud plans to launch additional satellites that are progressively larger and offer higher computing performance.

Moves by other big tech players competing with SpaceX are also taking shape. Google is planning to start launching satellites in 2027 to test space data centers. Blue Origin, the space company founded by Amazon founder Jeff Bezos, is also exploring the feasibility of space data centers, operating an organization dedicated to research on the relevant technologies.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.
