Fighting Deepfakes: From AI Sheriff to Digital DNA Trading

Dong-A Ilbo

Updated 2026.01.28

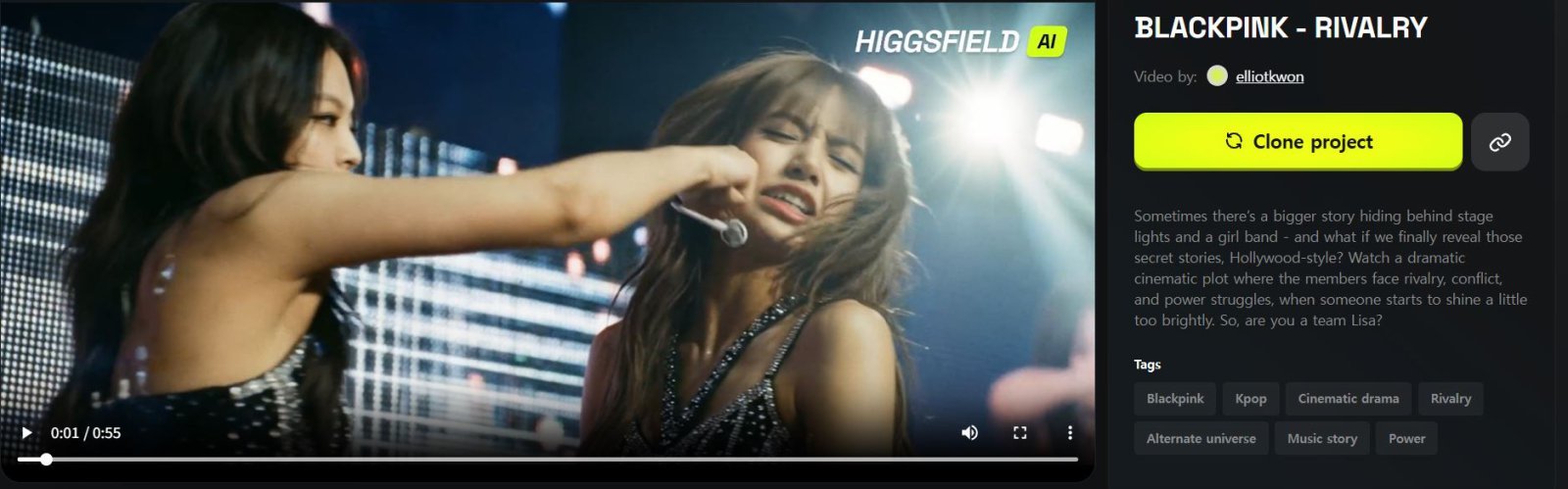

A video showing BLACKPINK members roughly shoving and hitting one another recently went viral online. The scuffle ended only when a man in a suit held out an envelope of cash. From fleeting facial expressions to muscle movements, the footage was so vivid that it was almost indistinguishable from reality, but it was in fact a sophisticated fake created by artificial intelligence (AI). Produced without the artists’ consent or even their agency’s knowledge, the video starkly illustrated how easily technological advances can be turned against individual dignity.

● An era of fakes more real than reality

Until very recently, the prevailing view was that “AI videos look awkward,” but that has already become outdated. AI technologies capable of reproducing everything from cinematic lighting to natural camera work are shaking up the landscape of the advertising and film industries. Yet behind this technological progress lies a chilling reality in which a replica that is “more like me than I am” infringes on personal rights and siphons off revenues.

The profit that producers can gain is far too great to be generously dismissed as mere “fan content.” In a digital ecosystem where view counts translate directly into revenue, celebrities’ faces are among the most powerful commercial tools.

Such deepfake issues are not confined to public figures. Ordinary people who post photos on social media as part of daily life can at any time become targets of deepfake pornography or voice phishing scams. In December last year, Elon Musk’s AI company xAI added an image editing function to its AI chatbot “Grok,” sparking controversy because it could easily transform photos posted on the web into images of people in their underwear and expose them to other users on the social network service X.

The European nonprofit organization “AI Forensics” analyzed 200,000 random images generated by Grok between 25 December last year and 1 January this year, and reported that 53% depicted people wearing only minimal clothing such as underwear or bikinis, with 81% of these images appearing to feature women.

● “Deepfakes, freeze!” The rise of AI sheriffs

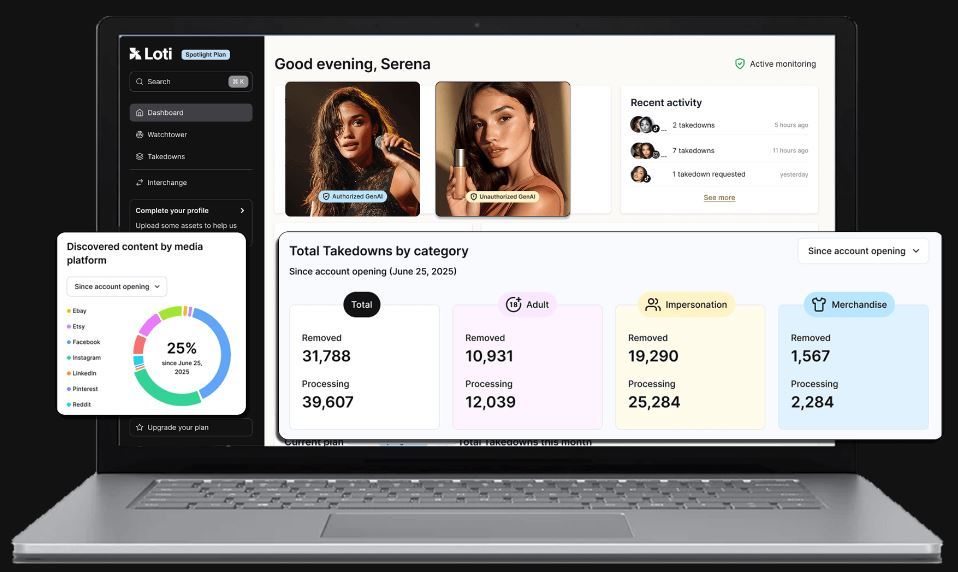

For individuals, it is nearly impossible to identify one by one where their portrait rights are being misappropriated or abused in the blind spots of the web. Sensing this anxiety and turning it into a business opportunity, U.S.-based LOTI AI has emerged. LOTI AI presents itself as an “AI sheriff,” specializing in the detection and removal of deepfakes.

Based on photos, videos, and audio data provided by users, LOTI AI monitors the web in real time to track down illicitly distributed deepfake videos and impersonation accounts. When abuse is detected, its automated system immediately files takedown requests with platforms.

A LOTI AI representative stated, “It is already difficult for individuals to manually track and report harmful content one by one, but a bigger problem is that while they anxiously wait for an image to be removed, the same image can be uploaded to another platform,” adding, “Our goal is to delete 95% of harmful content within 17 hours by using our web monitoring and automated takedown request solution.” In effect, LOTI AI’s business is “digital quarantine”: using technology to defend against damages caused by technology.

● Rights and assets: the emergence of ‘digital DNA’

There are also moves to go a step further and actively convert digital portrait rights into a revenue model. KDDC (Korea Digital DNA Center), a subsidiary of VFX (visual effects) specialist M83, has begun work to turn an individual’s “digital DNA” into that person’s official IP (intellectual property), so that it can be used as a legitimate material in AI content production.

KDDC uses a 3D scanner equipped with more than 100 cameras to scan in detail a person’s appearance, facial expressions, voice, and manner of speaking, and stores this information in a database. Its goal is to establish a system under which only registered data are authorized for legitimate use, while unregistered composites are identified as illegal.

Jeong Ui-seok, CEO of KDDC, explained, “Even if an advertising model is not physically present on set, it is possible to produce advertisements and settle revenues using that person’s digital DNA.” KDDC is currently working with the Korea Entertainment Management Association to build the digital DNA of celebrities affiliated with the association.

● “Digital portrait rights require legal and institutional reform”

Having built experience in the film industry as head of Vantage Holdings, the investment and production company behind films such as “The Chaser” and “Crush and Blush,” CEO Jeong forecasts that the video ecosystem will undergo a fundamental reorganization due to AI within the next four to five years. He stressed that, prior to this shift, a legal and institutional framework for digital portrait rights must be established in order to foster a healthy video industry ecosystem.

Under the current legal system, digital portrait rights are not explicitly defined. Traditional portrait rights have been interpreted mainly around acts of photographing and publishing, and there are no specific criteria for applying them to digitally synthesized images created by AI technologies.

There is also a legal vacuum from the perspective of copyright law. Copyright law defines a work as a creative output that expresses “human thought or emotion,” and therefore, as a rule, AI-generated outputs are not recognized as works. Only where an actual human author’s creative contribution through substantive instructions is acknowledged can such outputs be granted limited copyright protection.

Jeong said, “We are entering an era in which anyone can generate content thanks to the popularization of AI technologies, but in the absence of a supporting rights framework, the legal ownership of generated outputs will inevitably become a matter of dispute after the fact,” adding, “There is an urgent need to build the legal and institutional infrastructure that will both protect individuals’ moral and property rights and make the creative ecosystem sustainable in the AI era.”

A brawl between BLACKPINK members generated by AI. A user of a generative AI platform created and shared the video on the platform to test the AI’s performance.

● An era of fakes more real than reality

Until very recently, the prevailing view was that “AI videos look awkward,” but that has already become outdated. AI technologies capable of reproducing everything from cinematic lighting to natural camera work are shaking up the landscape of the advertising and film industries. Yet behind this technological progress lies a chilling reality in which a replica that is “more like me than I am” infringes on personal rights and siphons off revenues.

An Instagram user generated selfie-style videos that appear to have been taken with Hollywood stars and posted them on a self-operated channel, garnering more than 100 million views (faces are pixelated).

An Instagram user who operates a personal channel posted selfie-style videos seemingly taken with Hollywood stars and secured more than 100 million views. The user hinted that the videos were AI-generated, saying, “Taking selfies with celebrities seems to be trending these days, so I joined in my own way.” The issue, however, is that there was no process of obtaining permission for portrait rights or paying appropriate compensation.The profit that producers can gain is far too great to be generously dismissed as mere “fan content.” In a digital ecosystem where view counts translate directly into revenue, celebrities’ faces are among the most powerful commercial tools.

Such deepfake issues are not confined to public figures. Ordinary people who post photos on social media as part of daily life can at any time become targets of deepfake pornography or voice phishing scams. In December last year, Elon Musk’s AI company xAI added an image editing function to its AI chatbot “Grok,” sparking controversy because it could easily transform photos posted on the web into images of people in their underwear and expose them to other users on the social network service X.

The European nonprofit organization “AI Forensics” analyzed 200,000 random images generated by Grok between 25 December last year and 1 January this year, and reported that 53% depicted people wearing only minimal clothing such as underwear or bikinis, with 81% of these images appearing to feature women.

● “Deepfakes, freeze!” The rise of AI sheriffs

For individuals, it is nearly impossible to find every blind spot of the web where their portrait rights are being misappropriated or abused. U.S.-based LOTI AI has emerged to turn this anxiety into a business opportunity, presenting itself as an “AI sheriff” that specializes in detecting and removing deepfakes.

Using photos, videos, and audio data provided by users, LOTI AI monitors the web in real time to track down illicitly distributed deepfake videos and impersonation accounts. When it detects abuse, its automated system immediately files takedown requests with the platforms concerned.

U.S.-based LOTI AI tracks deepfakes or impersonation accounts on the web using clients’ photo, video, and audio data.
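The article does not say how LOTI AI decides that a piece of content shows a particular client. As a rough illustration only, one common technique for this kind of likeness matching is to compare face embeddings (numeric vectors produced by a face-recognition model) using cosine similarity and to flag anything above a threshold. In the sketch below, the embedding vectors, the 0.9 threshold, and the takedown-request format are all hypothetical assumptions, not a description of LOTI AI’s system.

```python
# Hypothetical sketch: flag web content whose face embedding is close to a
# client's reference embeddings, then draft takedown requests.
# Assumption: embeddings come from some face-recognition model (not shown);
# LOTI AI's actual method is not described in the article.
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str                 # where the content was found
    embedding: list[float]   # face embedding of the content (assumed precomputed)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_matches(references: list[list[float]],
                 candidates: list[Candidate],
                 threshold: float = 0.9) -> list[str]:
    """Return URLs of candidates resembling any reference (hypothetical threshold)."""
    if not references:
        return []
    flagged = []
    for cand in candidates:
        best = max(cosine_similarity(ref, cand.embedding) for ref in references)
        if best >= threshold:
            flagged.append(cand.url)
    return flagged


def draft_takedown_requests(urls: list[str]) -> list[dict[str, str]]:
    """Hypothetical request format; each platform has its own reporting process."""
    return [{"url": u, "reason": "unauthorized use of likeness"} for u in urls]


refs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
found = [
    Candidate("https://example.com/a", [0.95, 0.05, 0.0]),
    Candidate("https://example.com/b", [0.0, 1.0, 0.0]),
]
print(draft_takedown_requests(find_matches(refs, found)))
# prints [{'url': 'https://example.com/a', 'reason': 'unauthorized use of likeness'}]
```

A production system would also have to handle voices, cropped or altered faces, and false positives, which could warrant a human review step before any request is filed.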

A LOTI AI representative stated, “It is already difficult for individuals to manually track and report harmful content one by one, but a bigger problem is that while they anxiously wait for an image to be removed, the same image can be uploaded to another platform,” adding, “Our goal is to delete 95% of harmful content within 17 hours by using our web monitoring and automated takedown request solution.” In effect, LOTI AI’s business is “digital quarantine”: using technology to defend against damages caused by technology.
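The “95% within 17 hours” goal quoted above is essentially a service-level target. The sketch below shows one way such a target could be checked against a log of detections. It assumes (our assumption, not stated in the article) that the 17-hour clock starts when content is detected and that items still online count as misses.

```python
# Hypothetical check of a "95% removed within 17 hours" target.
# Assumption: the clock starts at detection; unremoved items count as misses.
from datetime import datetime, timedelta
from typing import Optional

TARGET_SHARE = 0.95
TARGET_WINDOW = timedelta(hours=17)


def share_removed_within(log: list[tuple[datetime, Optional[datetime]]],
                         window: timedelta = TARGET_WINDOW) -> float:
    """Fraction of detected items removed within `window` of detection."""
    if not log:
        return 0.0
    on_time = sum(
        1 for detected, removed in log
        if removed is not None and removed - detected <= window
    )
    return on_time / len(log)


def meets_target(log: list[tuple[datetime, Optional[datetime]]]) -> bool:
    return share_removed_within(log) >= TARGET_SHARE


t0 = datetime(2026, 1, 1, 9, 0)
sample = [
    (t0, t0 + timedelta(hours=5)),    # removed in time
    (t0, t0 + timedelta(hours=17)),   # removed exactly at the limit
    (t0, t0 + timedelta(hours=20)),   # removed too late
    (t0, None),                       # still online
]
print(share_removed_within(sample), meets_target(sample))  # prints 0.5 False
```

Measuring from upload time instead of detection time would make the same target considerably harder to meet, so the choice of starting point matters when comparing such figures.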

● Rights and assets: the emergence of ‘digital DNA’

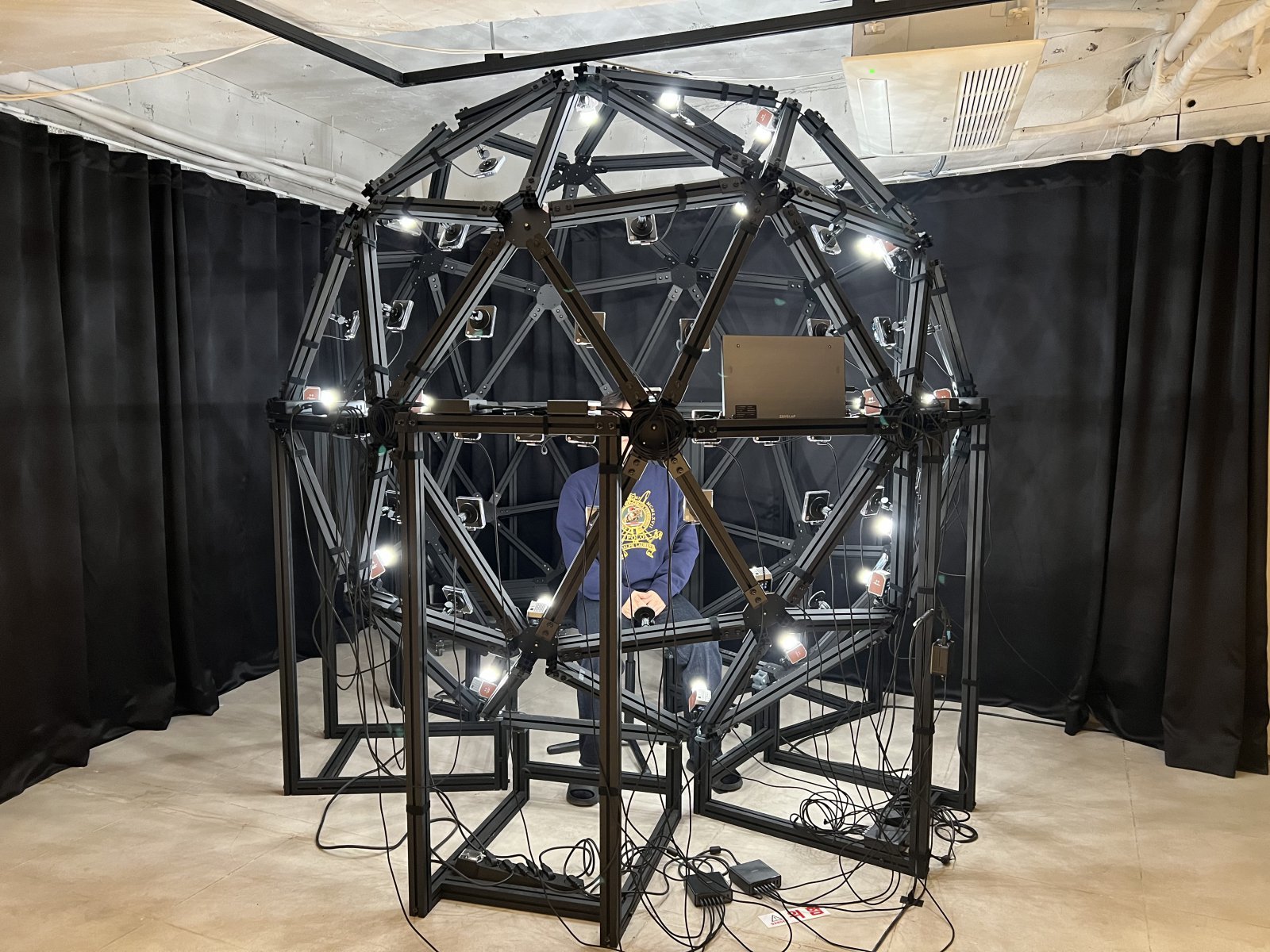

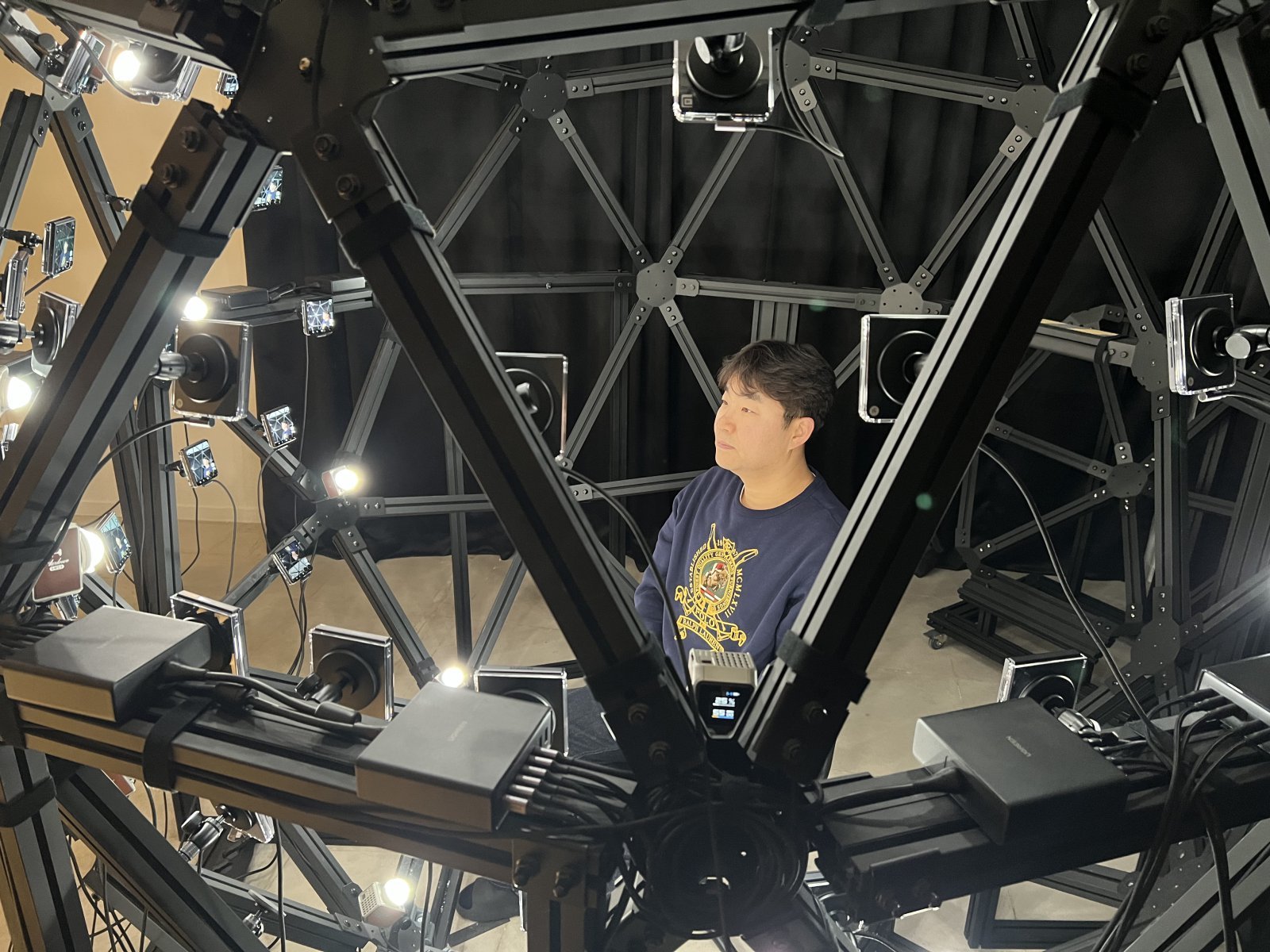

Others are going a step further, actively turning digital portrait rights into a revenue model. KDDC (Korea Digital DNA Center), a subsidiary of VFX (visual effects) specialist M83, has begun work to turn an individual’s “digital DNA” into that person’s official IP (intellectual property), so that it can be used as legitimate source material in AI content production.

KDDC captures a person’s appearance, facial expressions, voice, and manner of speaking in detail, using a 3D scanner equipped with more than 100 cameras, and stores this information in a database. Its goal is to establish a system in which only registered data are authorized for legitimate use, while unregistered composites are identified as illegal.
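The article does not explain how KDDC’s registry works internally. As a minimal sketch of the principle that only registered data may be used, the hypothetical registry below issues an ID for each scan (here simply a SHA-256 digest of the scan bytes), records which uses the person has licensed, and classifies a request as authorized, unlicensed, or unregistered. All names and use categories are illustrative assumptions; spotting an unregistered composite in the wild would additionally require detection such as face matching, which this sketch does not cover.

```python
# Hypothetical "digital DNA" registry: only registered, licensed uses pass.
# Assumptions: IDs are SHA-256 digests of scan data; use labels are free-form.
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Registration:
    person: str
    licensed_uses: set[str] = field(default_factory=set)


class DigitalDNARegistry:
    def __init__(self) -> None:
        self._records: dict[str, Registration] = {}

    def register(self, scan_data: bytes, person: str, licensed_uses: set[str]) -> str:
        """Store a scan's registration and return its ID."""
        reg_id = hashlib.sha256(scan_data).hexdigest()
        self._records[reg_id] = Registration(person, set(licensed_uses))
        return reg_id

    def check(self, reg_id: Optional[str], use: str) -> str:
        """Classify a request to use a likeness for a given purpose."""
        record = self._records.get(reg_id) if reg_id else None
        if record is None:
            return "unregistered"
        if use not in record.licensed_uses:
            return "registered, use not licensed"
        return "authorized"


registry = DigitalDNARegistry()
rid = registry.register(b"<scan bytes>", "Model A", {"advertising"})
print(registry.check(rid, "advertising"))   # prints authorized
print(registry.check(rid, "film"))          # prints registered, use not licensed
print(registry.check(None, "advertising"))  # prints unregistered
```

Keeping the licensed uses per registration, rather than a single yes/no flag, mirrors the article’s point that a registered likeness could be used for advertising and revenue settlement only on agreed terms.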

Jeong Ui-seok, CEO of KDDC, explained, “Even if an advertising model is not physically present on set, it is possible to produce advertisements and settle revenues using that person’s digital DNA.” KDDC is currently working with the Korea Entertainment Management Association to build the digital DNA of celebrities affiliated with the association.

A 3D scanner that records a person’s appearance, facial expressions, and gestures with more than 100 digital cameras and stores them as digital data. KDDC (Korea Digital DNA Center) says this data can serve as the foundation for legitimate exercise of digital portrait rights. Courtesy of KDDC.

● “Digital portrait rights require legal and institutional reform”

CEO Jeong, who built his film-industry career as head of Vantage Holdings, the investment and production company behind films such as “The Chaser” and “Crush and Blush,” forecasts that AI will fundamentally reorganize the video ecosystem within the next four to five years. He stressed that a legal and institutional framework for digital portrait rights must be in place before this shift in order to foster a healthy video industry.

Under the current legal system, digital portrait rights are not explicitly defined. Traditional portrait rights have been interpreted mainly around acts of photographing and publishing, and there are no specific criteria for applying them to digitally synthesized images created by AI technologies.

There is also a legal vacuum under copyright law. Copyright law defines a work as a creative expression of “human thought or emotion,” so AI-generated outputs are, as a rule, not recognized as works. They can receive limited copyright protection only where a human author is recognized as having made a creative contribution through substantive instructions.

Jeong said, “We are entering an era in which anyone can generate content thanks to the popularization of AI technologies, but in the absence of a supporting rights framework, the legal ownership of generated outputs will inevitably become a matter of dispute after the fact,” adding, “There is an urgent need to build the legal and institutional infrastructure that will both protect individuals’ moral and property rights and make the creative ecosystem sustainable in the AI era.”

Kim Hyun-ji

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.
