FuriosaAI

FuriosaAI Begins Mass Production of RNGD NPU, Targets Global Market

Dong-A Ilbo |

Updated 2026.01.28

AI semiconductor specialist FuriosaAI is accelerating mass production of its second-generation neural processing unit (NPU), RNGD (Renegade), and launching an offensive on commercialization and hyperscaler customers. Since first unveiling RNGD at the semiconductor design conference “Hot Chips 2024” in the United States in August 2024, FuriosaAI has been setting up business contracts and cooperation frameworks on multiple fronts, and will begin product deliveries with this mass production run. The initial production batch consists of 4,000 units: TSMC, as foundry partner, manufactures the chips and Asus produces the cards for shipment.

FuriosaAI had already accumulated mass production experience with its first-generation NPU, Warboy, and the second-generation RNGD has also entered volume production smoothly. Since the product announcement, FuriosaAI has focused on hardware stabilization and enhancement of its software stack to strengthen product competitiveness, building use cases such as confirmed adoption of RNGD for the Exaone model at LG AI Research and a public demonstration of OpenAI’s gpt-oss 120B model running on its hardware.

With successful mass production of data center and enterprise-grade products, FuriosaAI is expected to compete with global AI accelerators such as Groq LPU, Tenstorrent Wormhole and Blackhole, Cerebras WSE-3, and SambaNova SN40L RDU.

The RNGD now in production is supplied in the form of a single-card RNGD PCIe card and as an NXT RNGD server configuration in which eight cards are integrated into a single rack unit. The RNGD PCIe card has a thermal design power (TDP) of 180W, enabling stable air-cooled operation, and delivers 512 teraflops of performance in FP8. It is equipped with 48GB of HBM3 memory and offers up to 1.5TB/s of bandwidth. The PCIe interface is configured as Gen5 x16 lanes.

The NXT RNGD server is a 4U rack-mount system that incorporates eight RNGD PCIe cards and two AMD EPYC processors, with total system power consumption of around 3kW. Up to five servers can be installed in a standard rack, providing up to 20 petaflops of inference performance per rack. It supports 384GB of HBM3 memory and 1TB of DDR5 system memory, and is equipped with a 1G NIC for management and a 25G NIC for data transfer. Both the RNGD PCIe card and the server use the Furiosa SDK and LLM runtime for software support.

RNGD’s performance has already been demonstrated by LG AI Research and other enterprises. In July last year, LG AI Research implemented inference computing for its Exaone model using RNGD. At that time, a single server fitted with four RNGD cards processed the LG Exaone 3.5 32B model with a batch size of 1 at 60 tokens per second for a 4K context window and 50 tokens per second for a 32K context window.

Performance has also been verified through a demo running OpenAI’s 120B model. The gpt-oss-120b model uses MXFP4, a 4-bit floating-point format, and FuriosaAI configured its hardware pipeline to support this format. In the connected demo system, it was optimized to achieve 5.8ms per output token. This suggests that large LLMs at the 100B-parameter level can be operated smoothly in offline environments on RNGD-based systems.

Starting with deliveries from this mass production batch, FuriosaAI plans to make a full-scale push into enterprise and hyperscaler markets. One affiliate of a major domestic conglomerate has already placed a purchase order for RNGD, and a growing number of global companies have completed validation and are now adopting RNGD.

FuriosaAI targets inference semiconductor market with focus on total cost of ownership

The target market for FuriosaAI’s NPU is not to replace GPUs but to coexist with them. In current-generation AI workloads, GPUs are widely used in training environments to build AI models. While GPUs can also be used for inference to run completed AI models, their price-performance ratio, power efficiency, and total cost of ownership are considered excessively poor for this purpose. FuriosaAI therefore envisions a market where GPUs continue to be used for training, while NPUs are introduced for inference.

In particular, RNGD—with a TDP of around 180W—can be operated stably in air-cooled servers and provides 2.5 times higher “throughput per rack” than GPU-based systems in standard environments. This means more AI inference workloads can be processed under the same space and power conditions. FuriosaAI CEO Baek Jun-ho stated, “RNGD mass production is a step forward toward becoming a global top-three AI and top-two semiconductor company,” adding, “We will accelerate our efforts to expand revenue in global markets.”

Reporter Nam Si-hyun, IT DongA (sh@itdonga.com)

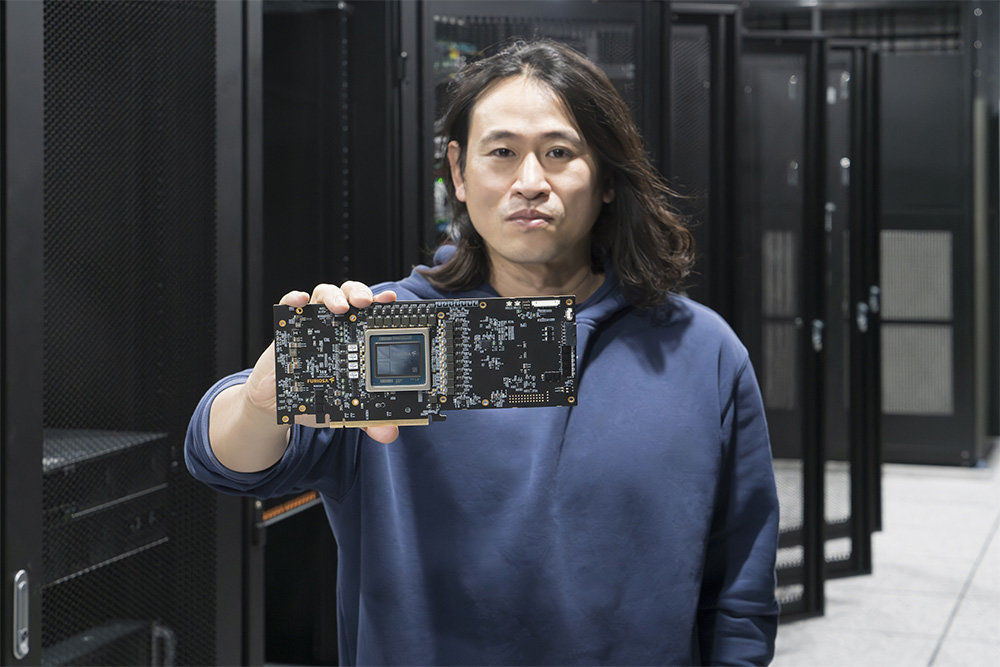

FuriosaAI CEO Baek Jun-ho introduces the second-generation NPU RNGD card / Source=IT DongA

FuriosaAI had already accumulated mass production experience with its first-generation NPU, Warboy, and the second-generation RNGD has also entered volume production smoothly. Since the product announcement, FuriosaAI has focused on hardware stabilization and enhancement of its software stack to strengthen product competitiveness, building use cases such as confirmed adoption of RNGD for the Exaone model at LG AI Research and a public demonstration of OpenAI’s gpt-oss 120B model running on its hardware.

With successful mass production of data center and enterprise-grade products, FuriosaAI is expected to compete with global AI accelerators such as Groq LPU, Tenstorrent Wormhole and Blackhole, Cerebras WSE-3, and SambaNova SN40L RDU.

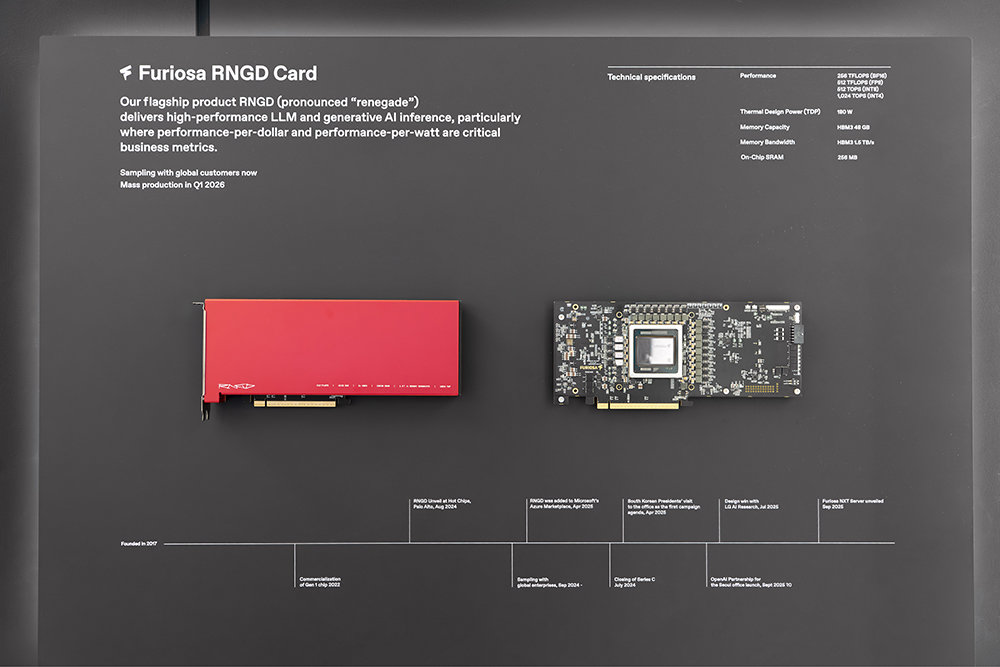

RNGD is sold as a single-card RNGD PCIe product and as an NXT RNGD server equipped with eight cards / Source=IT DongA

The RNGD now in production is supplied in the form of a single-card RNGD PCIe card and as an NXT RNGD server configuration in which eight cards are integrated into a single rack unit. The RNGD PCIe card has a thermal design power (TDP) of 180W, enabling stable air-cooled operation, and delivers 512 teraflops of performance in FP8. It is equipped with 48GB of HBM3 memory and offers up to 1.5TB/s of bandwidth. The PCIe interface is configured as Gen5 x16 lanes.

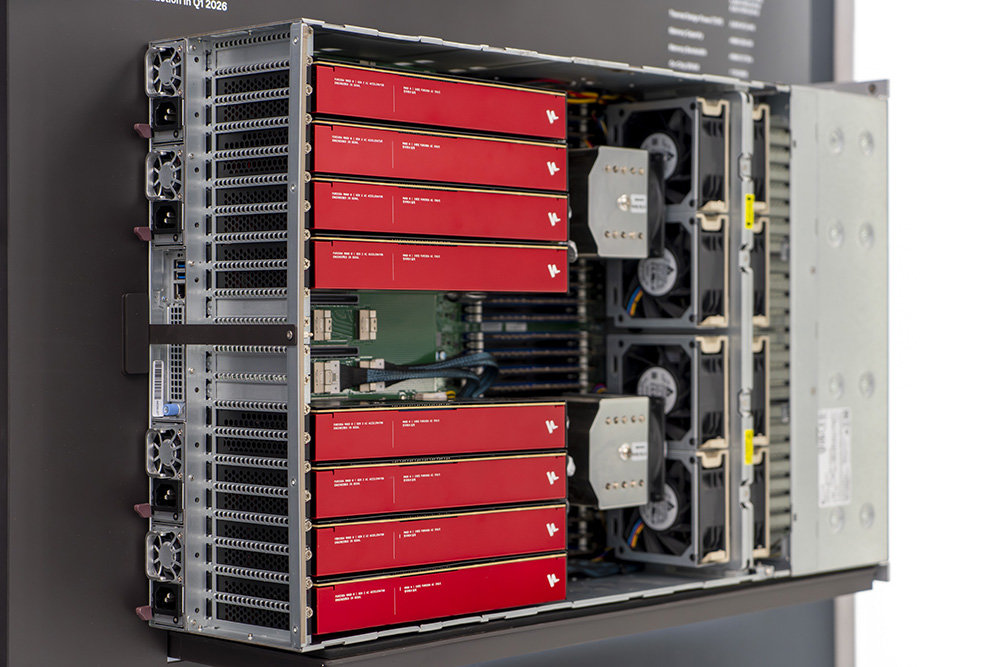

The NXT RNGD server, equipped with eight RNGD cards and two AMD EPYC processors, is a fully assembled server system / Source=IT DongA

The NXT RNGD server is a 4U rack-mount system that incorporates eight RNGD PCIe cards and two AMD EPYC processors, with total system power consumption of around 3kW. Up to five servers can be installed in a standard rack, providing up to 20 petaflops of inference performance per rack. It supports 384GB of HBM3 memory and 1TB of DDR5 system memory, and is equipped with a 1G NIC for management and a 25G NIC for data transfer. Both the RNGD PCIe card and the server use the Furiosa SDK and LLM runtime for software support.

RNGD’s performance has already been demonstrated by LG AI Research and other enterprises. In July last year, LG AI Research implemented inference computing for its Exaone model using RNGD. At that time, a single server fitted with four RNGD cards processed the LG Exaone 3.5 32B model with a batch size of 1 at 60 tokens per second for a 4K context window and 50 tokens per second for a 32K context window.

FuriosaAI implemented the gpt-oss-120b model on-premises using a demo system composed of two RNGD cards / Source=IT DongA

Performance has also been verified through a demo running OpenAI’s 120B model. The gpt-oss-120b model uses MXFP4, a 4-bit floating-point format, and FuriosaAI configured its hardware pipeline to support this format. In the connected demo system, it was optimized to achieve 5.8ms per output token. This suggests that large LLMs at the 100B-parameter level can be operated smoothly in offline environments on RNGD-based systems.

Starting with deliveries from this mass production batch, FuriosaAI plans to make a full-scale push into enterprise and hyperscaler markets. One affiliate of a major domestic conglomerate has already placed a purchase order for RNGD, and a growing number of global companies have completed validation and are now adopting RNGD.

FuriosaAI targets inference semiconductor market with focus on total cost of ownership

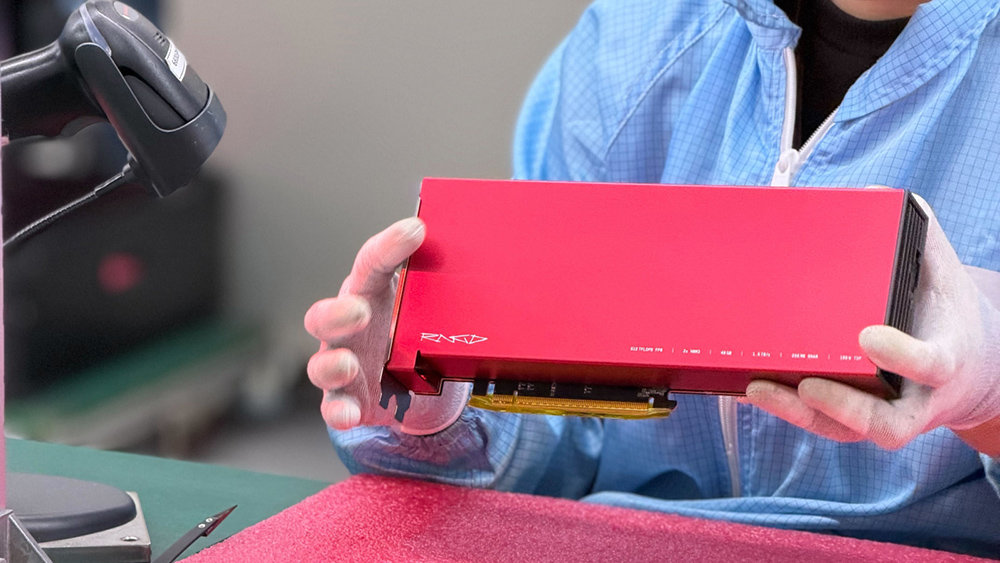

With this mass production run, the company aims to receive delivery of a total of 20,000 RNGD units within the year / Source=FuriosaAI

The target market for FuriosaAI’s NPU is not to replace GPUs but to coexist with them. In current-generation AI workloads, GPUs are widely used in training environments to build AI models. While GPUs can also be used for inference to run completed AI models, their price-performance ratio, power efficiency, and total cost of ownership are considered excessively poor for this purpose. FuriosaAI therefore envisions a market where GPUs continue to be used for training, while NPUs are introduced for inference.

In particular, RNGD—with a TDP of around 180W—can be operated stably in air-cooled servers and provides 2.5 times higher “throughput per rack” than GPU-based systems in standard environments. This means more AI inference workloads can be processed under the same space and power conditions. FuriosaAI CEO Baek Jun-ho stated, “RNGD mass production is a step forward toward becoming a global top-three AI and top-two semiconductor company,” adding, “We will accelerate our efforts to expand revenue in global markets.”

Reporter Nam Si-hyun, IT DongA (sh@itdonga.com)

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.

Popular News