Korea’s National AI Models Debut: A Midterm Check on K-AI

Dong-A Ilbo

Updated 2025.12.30

The first presentation of the “Independent AI Foundation Model” project, launched to secure the Republic of Korea’s artificial intelligence (AI) sovereignty, was held on December 30 at COEX in Samseong-dong, Seoul. The project aims to build “AI for All” for the state, enterprises, and citizens, with the goal of creating AI infrastructure that Korea can develop and operate on its own amid global AI competition. In August, the Ministry of Science and ICT selected five consortia (Naver Cloud, Upstage, SK Telecom, NC AI, and LG AI Research Institute) as the “national flagship AI elite teams” and has since been providing infrastructure and project support.

The project aims to achieve at least 95% of the performance of the world’s leading AI models released within the past six months, and through evaluations every six months, it will ultimately select two teams to serve as Korea’s representative AI models. Accordingly, after this presentation, one consortium will be eliminated by January 15. The project budget allocates KRW 62.8 billion to data, KRW 157.6 billion to GPU support, and KRW 25 billion to talent acquisition, with plans to complete the first development phase by the first half of 2026 and release the AI models as open source.

Baek Kyung-hoon, Minister of Science and ICT, said, “The Independent AI Foundation Model is the first gateway Korea must pass through to become one of the world’s top three AI powers. All five consortia are aiming for the highest global standards, and in this, there are no winners or losers. Whatever the outcome, the government will stand with them, and based on those results, we will work with the many companies participating in the consortia to create services and platforms and support them to expand globally.”

He continued, “Korea will establish itself as the AI capital of the Asia-Pacific region, and more AI infrastructure and data centers will be built here. Based on the Independent AI Foundation Model project, the Ministry of Science and ICT plans to support customized AI research across scientific disciplines. Through this, we will complete a Korean version of the Genesis Mission (a large-scale AI project led by the U.S. federal government) and elevate Korea’s science and technology to the next level. This project will drive Korea’s great AI transformation, and you should take pride in being at its starting point.”

Naver Cloud elite team to address complex AI environments with Omni model

Naver Cloud defines good AI as how it solves given problems and maintains stability over time. For Naver, a good model must deliver cost efficiency, high performance, and practicality. These three factors must be understood and function in an integrated way. In addition, from Naver’s perspective, “AI for All” must satisfy three conditions: ▲ Is it easily accessible to anyone? ▲ Does it strengthen the country’s industrial competitiveness? ▲ Does it reach groups that are marginalized from AI first?

To realize this, Naver Cloud chose the “Omni Foundation Model.” The Naver Cloud elite team will open source the first version based on the Omni Foundation Model—the HyperClova X SEED 8B Omni model—and the HyperClova X SEED 32B Think model, which significantly enhances visual and auditory capabilities for inference-based AI, and will move into full-scale implementation of AI agents.

The Omni model is an AI model that can simultaneously understand and generate multiple types of data, including text, images, audio, and video. The HyperClova X SEED Omni models are built from the ground up to understand data in omni format. Sung Nak-ho, Head of Technology, said, “There is no need to separately connect a text-based Large Language Model (LLM) or Optical Character Recognition (OCR) model. The AI itself reads charts directly and organically understands information. When processing information, there is also no need to call multiple models or go through complicated steps, which makes deployment into new environments flexible. To widely apply AI in industrial settings, an omni model is essential to achieve both performance and cost efficiency.”

In terms of performance, the models meet the target of achieving 95% of the latest global models. The Korean-language performance of the HyperClova X SEED 32B Think model is higher than that of InternVL3_5-38B Thinking, a multimodal LLM that adopts native multimodal pre-training. For vision recognition and AI agent execution capabilities, it also outperforms the Qwen3-VL-32B Think model. When given only photos of college entrance exam questions, it automatically recognizes the text with OCR, calculates answers, and achieved top scores (Grade 1) in all subjects except for Korean language (Grade 2). The smaller 8B model also supports omni capabilities, enabling cross-modal input and output of text, images, and audio.

Naver Cloud will use the 32B and 8B models to expand HyperClova’s omni-model business. Sung Nak-ho said, “Rather than building separate AI systems for each industry and service, a more efficient approach is to first complete a large-scale omni model and then derive lightweight variants.” He added that, from an AI agent perspective, Naver will focus on ▲ on-service AI for everyday life ▲ vertical AI tailored to each industry ▲ human-centric inclusive AI.

On-service AI is already being applied to the Naver portal, and in February next year, in cooperation with the Ministry of the Interior and Safety, Naver will provide an electronic certificate agent and a GongyooNuri reservation agent via Naver TalkTalk. “Naver’s sovereign AI has been built on a growing ecosystem and a full-stack AI architecture,” Sung said. “Naver Cloud aims to extend the benefits of AI to all citizens and expand business opportunities. Through this, we will create AI that improves individual lives and enables industries to grow together.”

NC AI to compete with sector-specific AI built on VAETKI LLM

Lee Yeon-soo, CEO of NC AI, said, “Our definition of industry-specialized AI is whether it can provide domain-specific expertise and be flexibly utilized. At the same time, we support on-premise deployment to protect corporate data, and instead of simply maximizing parameter counts, it is important to provide accurate, evidence-based responses. Together with 14 consortium and partner institutions and more than 40 demand-side organizations, NC AI has built VAETKI, a ‘vertical AI engine’ for core industries.”

VAETKI aims to be used even for physical AI across various industry sectors. Through the first phase of the project, NC AI has secured a 100B-parameter model along with Korean-language data and industry-specific data, and is generating results through 28 industry diffusion projects. For example, with InterX, a specialist in AX (AI transformation) for manufacturing, NC AI supports smart factory transformation of Korean SMEs in manufacturing, and it also collaborates with Hyundai AutoEver on industrial AX. In addition, it is carrying out logistics AX projects for global distributors, including POSCO and Lotte, and is developing specialized models in aviation and construction as well.

In the public sector, NC AI is building multimodal intelligent control and safety ecosystems in four hub cities and is working with the Army AI Center on defense innovation projects. It is also collaborating with the Korea Creative Content Agency and the broadcaster MBC on cultural content.

The completed VAETKI model branches into four variants to ensure efficiency and scalability. CEO Lee explained, “We are responding to a variety of scalability needs with a high-performance 100B-A10 MoE (Mixture-of-Experts) model, diffusion-type 20B-A2B MoE and 7B-A1B MoE models, and a diffusion-type 7B-A1B MoE VLM model that uses a dedicated vision model,” adding, “For data acquisition, we validated stability at up to 20 trillion tokens and focused on securing multimodal and field-specific data. We further refined performance using 14 types of multimodal data, including analysis of the Korean manufacturing industry.”

A key feature of the VAETKI AI engine is its combination with other AI models. “Barco,” which combines the VAETKI LLM with a 3D generation model, builds 3D assets and objects from text input alone, shortening generation work that previously took more than four weeks to under 10 minutes. This can be widely used in cultural content industries and industrial sites. When combined with sound, the LLM automatically infers, generates, and applies appropriate sound effects for a given situation.

Lee said, “Through the first phase, NC AI has built a 100B model. In the second phase, we will develop a 200B model, in the third phase we will focus on industry-specialized and diffusion-type LLMs, and from the fourth phase onward we plan to create lightweight, diffusion-type models based on multi-scaler and multimodal packages. Our policy is to provide AI models quickly and efficiently based on validated architectures. We will also build domain AI for non-experts and contribute to the spread of AI across industries.”

Startup elite team Upstage showcases capabilities with live demo

Kim Sung-hoon, CEO of Upstage, said, “For five years, I have led Upstage with the goal of building innovative AI that benefits everyone. Just as we started developing AI, LLMs emerged and we began developing the Solar LLM, successfully progressing from the initial 10.7B model to Solar Pro 2. Normally, we would now be aiming at the 30B level, but through the Independent AI Foundation Model project, we completed a 100B model,” as he began his presentation.

He continued, “To avoid wasting taxpayer-funded GPUs, we worked with Rablup to jointly optimize the training process. We built a system that automatically detects failures and restarts immediately, reducing recovery time by more than half. By optimizing the kernel and training code, we significantly increased tokens and training volume, enabling more than 200 million training iterations.”

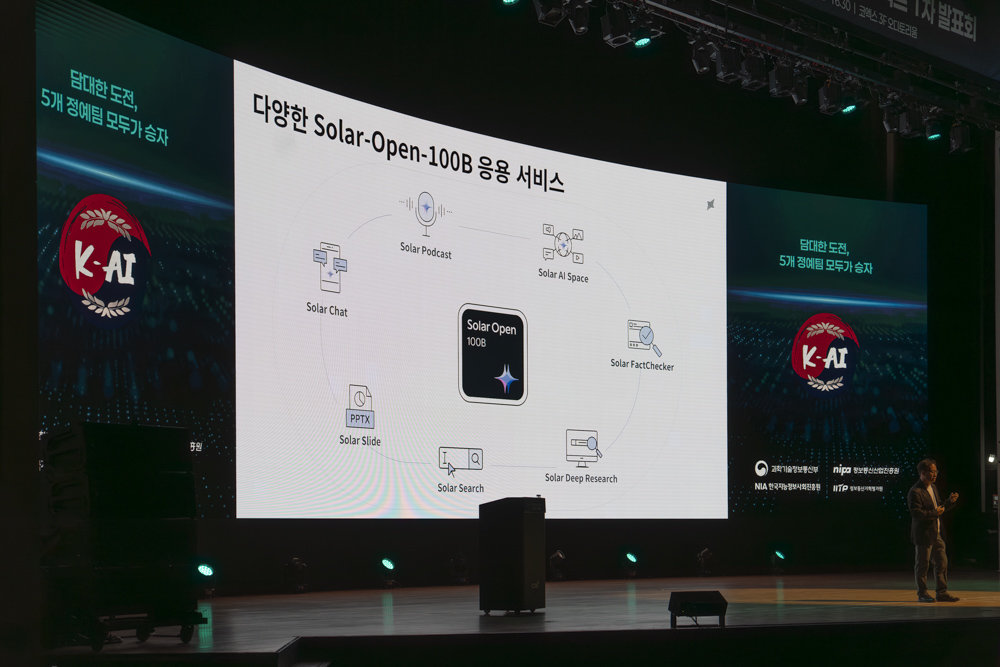

The Solar-Open-100B model has 102 billion parameters, with 10.2 billion parameters activated per query in an MoE structure. Kim said, “We are conducting blind tests against other global models. The model captures subtle nuances and sincerity in Korean and provides concise yet well-ordered explanations,” adding that it also supports complex features such as deep research, as provided by global LLMs, and generation of slides (PPT) and reports. Although major announcements are typically delivered through pre-recorded videos, Kim instead showcased real-time services on-site to underscore the model’s maturity.

The Solar-Open-100B model is publicly available for free on Hugging Face, and is currently being commercialized within the consortium. “Makinarocks uses our model for drafting business plans, VUNO in healthcare, Flitto for ultra-personalized AI translation, and Law&Company in the legal domain,” Kim said. “Nota, Rablup, and Orkestra are also using it for their respective services, and Day1 Company is planning a national hackathon built on the Solar LLM.” He added, “We will also partner with LLM company Allganize for business in Japan.”

Kim concluded, “To share our experience in building AI, Upstage has selected 10 model creators and is making their content freely available. We also provide Solar-Open-100B and all Upstage solutions free of charge to schools and non-profit organizations. Having achieved deep research capabilities with our 100B model, we will not stop here—we aim to reach 200B, 300B, and multimodal models. Together with our consortium partners, we will ensure that Korean AI stands tall on the global stage.”

SK Telecom bets on ultra-large 500B model

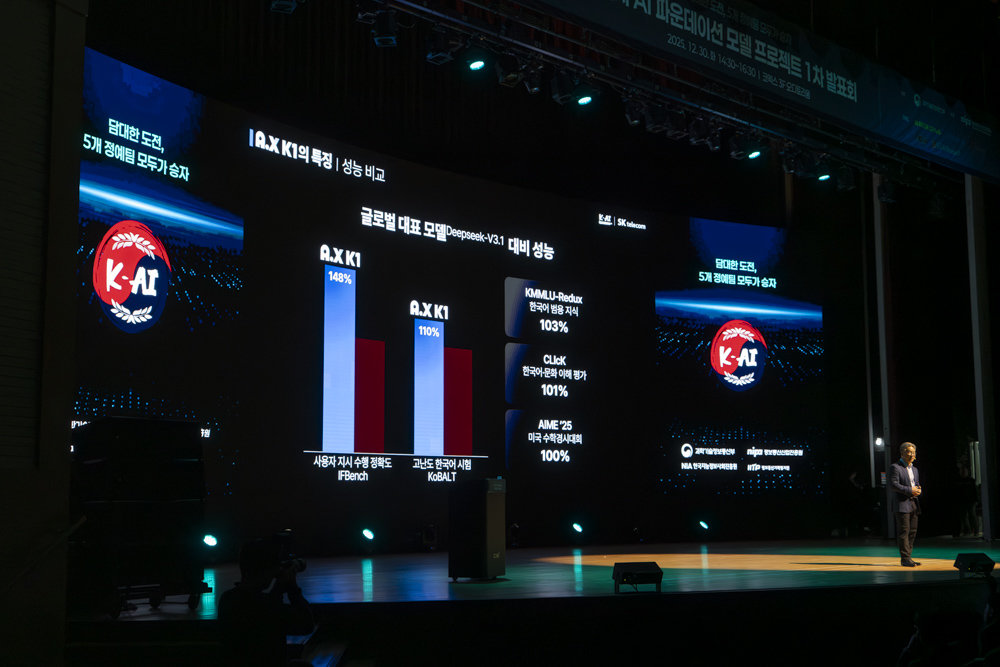

SK Telecom’s elite team includes Krafton, 42dot, Rebellions, LINER, Select Star, Seoul National University’s Industry-University Cooperation Foundation, and KAIST, and participates in the project under the goal of “developing next-generation transformer-based ultra-large models integrating language and multimodal actions, and implementing K-AI services.” In terms of size, the team has built a 500B-parameter model, the largest among the five consortia. Seok-geun Jung, Head of AI CIC, said, “Only China, France, Japan, and Korea have achieved 500B-level models so far, and only the United States has reached the 1,000B level. A.X K1 will be a powerful stepping stone for Korea to stand shoulder to shoulder with leading global AI nations,” as he opened his presentation.

“Bigger models are not automatically better,” Jung said. “However, in the AI world, model size directly translates into better performance and potential. The reason the U.S. is building trillion-parameter models is to understand human language context more completely.” A.X K1 has 500 billion parameters deployed in large-scale data centers, and operates as a 33B MoE model at inference time. Thanks to the large data scale, baseline performance is high, while smaller, domain-specific AI models operate for each user need, increasing power efficiency.

The project targets at least 95% of the performance of the world’s leading AI models released within the previous six months. Through evaluations held every six months, it will ultimately select two teams whose models will serve as Korea’s representative AI. Accordingly, one consortium will be eliminated by January 15 following this presentation. The project budget allocates KRW 62.8 billion to data, KRW 157.6 billion to GPU support, and KRW 25 billion to talent acquisition. The first development phase is scheduled to be completed by the first half of 2026, and the resulting AI models will be released as open source.
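The budget and the performance target can be checked with simple arithmetic. The sketch below, offered as an illustration only, sums the three budget items listed above (KRW 245.4 billion; the article does not say whether these are the only items) and expresses the 95% target as a ratio test. The article does not say how performance is measured, so comparing a single benchmark score against a reference model's score is an assumption, and the scores in the usage line are hypothetical.

```python
# Budget line items as reported in the article, in billions of KRW.
BUDGET_KRW_BN = {
    "data": 62.8,
    "GPU support": 157.6,
    "talent acquisition": 25.0,
}


def budget_shares(budget: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the total of the listed items and each item's share in percent."""
    total = sum(budget.values())
    shares = {item: 100.0 * amount / total for item, amount in budget.items()}
    return total, shares


def meets_target(score: float, reference_score: float, ratio: float = 0.95) -> bool:
    """Assumed reading of the target: score reaches `ratio` of the reference score."""
    return score >= ratio * reference_score


if __name__ == "__main__":
    total, shares = budget_shares(BUDGET_KRW_BN)
    print(f"Listed items total KRW {total:.1f} billion")
    for item, pct in shares.items():
        print(f"  {item}: {pct:.1f}%")
    # Hypothetical benchmark scores, for illustration only.
    print(meets_target(score=78.0, reference_score=80.0))
```

With these inputs, GPU support accounts for roughly 64% of the listed spending.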

Before the presentation, Minister of Science and ICT Baek Kyung-hoon shared his expectations for the Independent AI Foundation Model project / Source=ITDongA

Baek Kyung-hoon, Minister of Science and ICT, said, “The Independent AI Foundation Model is the first gateway Korea must pass through to become one of the world’s top three AI powers. All five consortia are aiming for the highest global standards, and in this, there are no winners or losers. Whatever the outcome, the government will stand with them, and based on those results, we will work with the many companies participating in the consortia to create services and platforms and support them to expand globally.”

He continued, “Korea will establish itself as the AI capital of the Asia-Pacific region, and more AI infrastructure and data centers will be built here. Based on the Independent AI Foundation Model project, the Ministry of Science and ICT plans to support customized AI research across scientific disciplines. Through this, we will complete a Korean version of the Genesis Mission (a large-scale AI project led by the U.S. federal government) and elevate Korea’s science and technology to the next level. This project will drive Korea’s great AI transformation, and you should take pride in being at its starting point.”

Naver Cloud elite team to address complex AI environments with Omni model

Naver Cloud defines good AI by how well it solves the problems it is given and how stably it performs over time. For Naver, a good model must deliver cost efficiency, high performance, and practicality, and these three factors must work together as an integrated whole. In addition, from Naver’s perspective, “AI for All” must satisfy three conditions: ▲ Is it easily accessible to anyone? ▲ Does it strengthen the country’s industrial competitiveness? ▲ Does it reach groups marginalized from AI first?

Naver Cloud aims to provide AI that supports diverse requirements from multiple angles based on its omni-modal foundation model / Source=ITDongA

To realize this, Naver Cloud chose an “Omni Foundation Model” approach. The Naver Cloud elite team will open source the HyperClova X SEED 8B Omni model, the first version of its Omni Foundation Model, along with the HyperClova X SEED 32B Think model, which adds significantly enhanced visual and auditory capabilities to reasoning AI. The team will then move into full-scale implementation of AI agents.

An omni model is an AI model that can simultaneously understand and generate multiple types of data, including text, images, audio, and video. The HyperClova X SEED Omni models are designed from the ground up to understand omni-modal data natively. Sung Nak-ho, Head of Technology, said, “There is no need to separately connect a text-based Large Language Model (LLM) or an Optical Character Recognition (OCR) model. The AI reads charts directly and understands the information holistically. Processing information also does not require calling multiple models or going through complicated steps, which makes the model easy to deploy in new environments. To apply AI widely in industrial settings, an omni model is essential for achieving both performance and cost efficiency.”

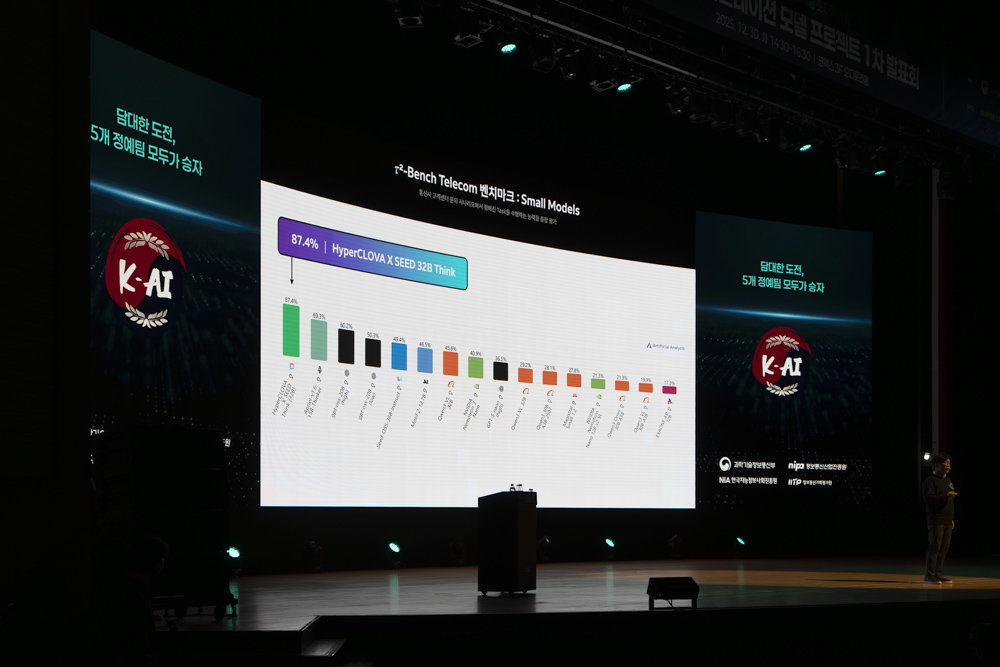

The HyperClova X SEED 32B Think model demonstrates very strong language capabilities / Source=ITDongA

In terms of performance, the models meet the target of 95% of the latest global models. In Korean-language performance, the HyperClova X SEED 32B Think model outperforms InternVL3_5-38B Thinking, a multimodal LLM built with native multimodal pre-training, and in vision recognition and AI agent execution it also outperforms the Qwen3-VL-32B Think model. Given only photos of college entrance exam questions, it automatically recognized the text, worked out the answers, and achieved the top score (Grade 1) in every subject except Korean language (Grade 2). The smaller 8B model also supports omni capabilities, enabling cross-modal input and output of text, images, and audio.

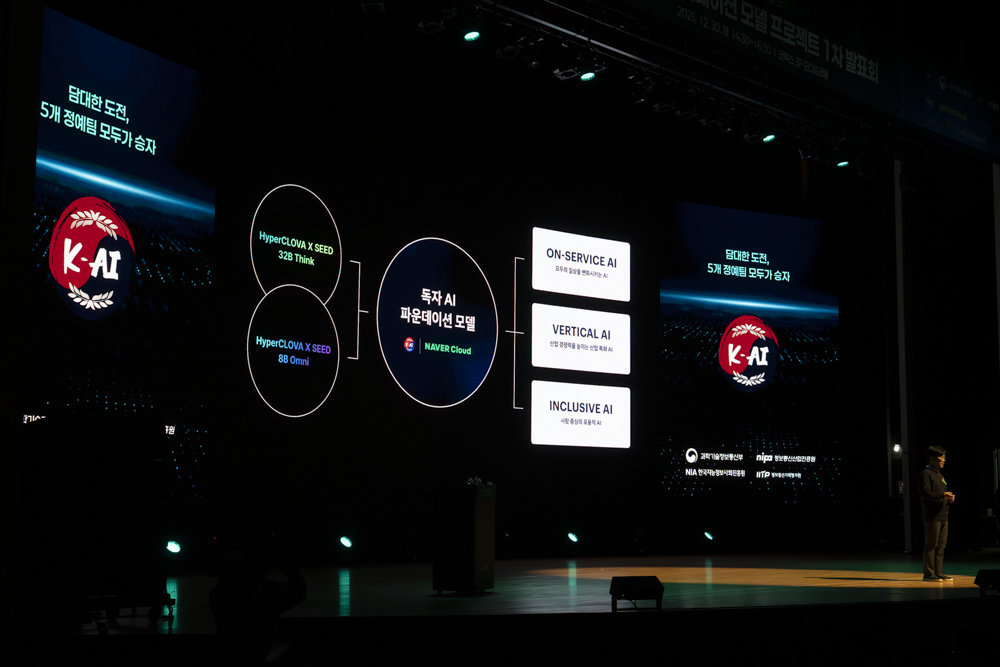

Naver Cloud plans to build on-service AI, vertical AI, and inclusive AI based on its Independent AI Foundation Model / Source=ITDongA

Naver Cloud will use the 32B and 8B models to expand HyperClova’s omni-model business. Sung Nak-ho said, “Rather than building separate AI systems for each industry and service, a more efficient approach is to first complete a large-scale omni model and then derive lightweight variants.” He added that, from an AI agent perspective, Naver will focus on ▲ on-service AI for everyday life ▲ vertical AI tailored to each industry ▲ human-centric inclusive AI.
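Sung does not describe how the lightweight variants are derived from the large omni model. Knowledge distillation is one widely used technique for this, so the sketch below shows its core loss as an assumed example, not Naver’s method: a smaller student model is trained to match the temperature-softened output distribution of a larger teacher, measured here with KL divergence and scaled by T² as is common practice. The logits are made-up numbers.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: list[float], student_logits: list[float], temperature: float = 2.0
) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature**2 * kl


if __name__ == "__main__":
    teacher = [1.0, 2.0, 3.0]  # hypothetical teacher logits over a 3-token vocabulary
    print(distillation_loss(teacher, [1.0, 2.0, 2.5]))  # student close to teacher: small loss
    print(distillation_loss(teacher, [3.0, 2.0, 1.0]))  # student far from teacher: larger loss
```

In practice this term is usually combined with an ordinary loss on ground-truth labels and minimized by gradient descent over the student’s parameters.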

On-service AI is already being applied to the Naver portal. In February 2026, in cooperation with the Ministry of the Interior and Safety, Naver will provide an electronic certificate agent and a GongyooNuri reservation agent via Naver TalkTalk. “Naver’s sovereign AI has been built on a growing ecosystem and a full-stack AI architecture,” Sung said. “Naver Cloud aims to extend the benefits of AI to all citizens and expand business opportunities. Through this, we will create AI that improves individual lives and enables industries to grow together.”

NC AI to compete with sector-specific AI built on VAETKI LLM

Lee Yeon-soo, CEO of NC AI, said, “We define industry-specialized AI by whether it can provide domain-specific expertise and be used flexibly. At the same time, we support on-premise deployment to protect corporate data, and rather than simply maximizing parameter counts, we consider it important to provide accurate, evidence-based responses. Together with 14 consortium and partner institutions and more than 40 demand-side organizations, NC AI has built VAETKI, a ‘vertical AI engine’ for core industries.”

NC AI unveiled VAETKI, an AI model designed to actively respond to conditions in each industry / Source=ITDongA

VAETKI is designed to serve even physical AI across various industry sectors. Through the first phase of the project, NC AI has secured a 100B-parameter model along with Korean-language and industry-specific data, and is producing results through 28 projects that spread AI across industries. For example, NC AI works with InterX, a manufacturing AX (AI transformation) specialist, to support smart factory transformation for Korean manufacturing SMEs, and collaborates with Hyundai AutoEver on industrial AX. It is also carrying out logistics AX projects for global distributors, including POSCO and Lotte, and developing specialized models for aviation and construction.

In the public sector, NC AI is building multimodal intelligent control and safety ecosystems in four hub cities and is working with the Army AI Center on defense innovation projects. It is also collaborating with the Korea Creative Content Agency and the broadcaster MBC on cultural content.

VAETKI branches into four models, from a maximum 100B base model down to 20B, 7B, and a 7B vision model / Source=ITDongA

The completed VAETKI model branches into four variants for efficiency and scalability. CEO Lee explained, “We are responding to a variety of scalability needs with a high-performance 100B-A10B MoE (Mixture-of-Experts) model, diffusion-type 20B-A2B MoE and 7B-A1B MoE models, and a diffusion-type 7B-A1B MoE VLM model that uses a dedicated vision model.” He added, “For data acquisition, we validated stability at up to 20 trillion tokens and focused on securing multimodal and field-specific data. We further refined performance using 14 types of multimodal data, including analyses of the Korean manufacturing industry.”
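The variant names appear to follow a common MoE labeling convention in which “20B-A2B” means about 20B total parameters with about 2B active for each token (on that reading, the flagship label would be 100B-A10B). Assuming that convention, which the article itself does not spell out, the short sketch below parses such labels and reports the share of parameters each model uses per token.

```python
import re
from dataclasses import dataclass

# Assumed label convention: "<total>B-A<active>B", e.g. "20B-A2B".
LABEL_RE = re.compile(r"^(\d+(?:\.\d+)?)B-A(\d+(?:\.\d+)?)B$")


@dataclass(frozen=True)
class MoESize:
    total_b: float   # total parameters, in billions
    active_b: float  # parameters active per token, in billions

    @property
    def active_fraction(self) -> float:
        return self.active_b / self.total_b


def parse_moe_label(label: str) -> MoESize:
    """Parse a '<total>B-A<active>B' label into total and active parameter counts."""
    match = LABEL_RE.match(label)
    if match is None:
        raise ValueError(f"not a <total>B-A<active>B label: {label!r}")
    return MoESize(total_b=float(match.group(1)), active_b=float(match.group(2)))


if __name__ == "__main__":
    for label in ["100B-A10B", "20B-A2B", "7B-A1B"]:
        size = parse_moe_label(label)
        print(f"{label}: {size.active_fraction:.0%} of parameters active per token")
```

Under this reading, the 100B and 20B variants each activate about 10% of their parameters per token and the 7B variants about 14%.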

A key feature of the VAETKI AI engine is that it can be combined with other AI models. “Barco,” which pairs the VAETKI LLM with a 3D generation model, builds 3D assets and objects from text input alone, cutting work that previously took more than four weeks to under 10 minutes. This can be widely used in the cultural content industry and at industrial sites. When combined with sound generation, the LLM infers what a given situation calls for and automatically generates and applies appropriate sound effects.

NC AI plans to ultimately pursue a 200B model and further diversify its models / Source=ITDongA

Lee said, “Through the first phase, NC AI has built a 100B model. In the second phase, we will develop a 200B model, in the third phase we will focus on industry-specialized and diffusion-type LLMs, and from the fourth phase onward we plan to create lightweight, diffusion-type models based on multi-scaler and multimodal packages. Our policy is to provide AI models quickly and efficiently based on validated architectures. We will also build domain AI for non-experts and contribute to the spread of AI across industries.”

Startup elite team Upstage showcases capabilities with live demo

Kim Sung-hoon, CEO of Upstage, took the podium / Source=ITDongA

Kim Sung-hoon, CEO of Upstage, opened his presentation by saying, “For five years, I have led Upstage with the goal of building innovative AI that benefits everyone. Just as we started developing AI, LLMs emerged, so we began developing the Solar LLM and progressed from the initial 10.7B model to Solar Pro 2. Normally, we would now be aiming for the 30B level, but through the Independent AI Foundation Model project, we completed a 100B model.”

He continued, “To avoid wasting taxpayer-funded GPUs, we worked with Rablup to jointly optimize the training process. We built a system that automatically detects failures and restarts immediately, reducing recovery time by more than half. By optimizing the kernel and training code, we significantly increased tokens and training volume, enabling more than 200 million training iterations.”
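Upstage does not detail how its failure detection and restart system works. As a generic illustration only, and not Upstage’s or Rablup’s implementation, the sketch below simulates a training loop that saves a checkpoint at a fixed interval and, when a randomly injected failure occurs, rolls back to the last checkpoint and resumes on its own instead of waiting for manual intervention. The failure rate and checkpoint interval are hypothetical parameters, and the returned count of wasted steps shows how much work each failure throws away.

```python
import random


def train_with_auto_restart(
    total_steps: int, checkpoint_every: int, failure_rate: float, seed: int = 0
) -> tuple[int, int, int]:
    """Simulate training that resumes from its last checkpoint after each failure.

    Returns (final_step, restarts, wasted_steps), where wasted_steps counts the
    steps that had to be redone because they were lost in a failure.
    """
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure_rate must be in [0, 1)")
    rng = random.Random(seed)
    checkpoint = 0  # last step saved to durable storage
    step = 0
    restarts = 0
    wasted = 0
    while step < total_steps:
        step += 1  # attempt one (simulated) training step
        if rng.random() < failure_rate:
            # Simulated failure: discard unsaved progress and restart from the checkpoint.
            wasted += step - checkpoint
            step = checkpoint
            restarts += 1
            continue
        if step % checkpoint_every == 0:
            checkpoint = step  # periodic checkpoint
    return step, restarts, wasted


if __name__ == "__main__":
    print(train_with_auto_restart(total_steps=1_000, checkpoint_every=50, failure_rate=0.002))
```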

Upstage built a 100B model immediately after Solar Pro 2 22B / Source=ITDongA

The Solar-Open-100B model has 102 billion parameters, of which 10.2 billion are activated per query in an MoE structure. Kim said, “We are conducting blind tests against other global models. The model captures subtle nuances and sincerity in Korean and provides concise yet well-organized explanations.” He added that, like leading global LLMs, it also supports complex features such as deep research and the generation of slides (PPT) and reports. While major announcements like this are typically delivered through pre-recorded videos, Kim demonstrated the services live on-site to underscore the model’s maturity.
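In a Mixture-of-Experts layer, a small router scores a set of expert sub-networks and only the top few are run, which is how a model with 102 billion parameters can use about a tenth of them at a time. In typical MoE designs this routing is done per token rather than per query. The sketch below shows generic top-k gating with toy scalar “experts”; the expert count, k, and router scores are hypothetical, the scores are supplied directly rather than computed from the input by a learned layer, and nothing here reflects Solar-Open-100B’s actual configuration.

```python
import math
from typing import Callable


def softmax(xs: list[float]) -> list[float]:
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(
    x: float, experts: list[Callable[[float], float]], router_logits: list[float], k: int
) -> float:
    """Weighted sum of the chosen experts' outputs; unchosen experts are never called."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(router_logits, k))


if __name__ == "__main__":
    # Eight toy experts, each simply scaling its input by a different factor.
    experts = [lambda x, s=s: s * x for s in range(1, 9)]
    router_logits = [0.2, 1.5, -0.3, 2.1, 0.0, -1.2, 0.7, 1.1]  # hypothetical router scores
    print(top_k_route(router_logits, k=2))  # only experts 3 and 1 are selected
    print(moe_forward(1.0, experts, router_logits, k=2))
```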

Upstage is blind-testing outputs via a closed model and plans to open source the model soon / Source=ITDongA

The Solar-Open-100B model is publicly available for free on Hugging Face, and is currently being commercialized within the consortium. “Makinarocks uses our model for drafting business plans, VUNO in healthcare, Flitto for ultra-personalized AI translation, and Law&Company in the legal domain,” Kim said. “Nota, Rablup, and Orkestra are also using it for their respective services, and Day1 Company is planning a national hackathon built on the Solar LLM.” He added, “We will also partner with LLM company Allganize for business in Japan.”

Kim concluded, “To share our experience in building AI, Upstage has selected 10 model creators and is making their content freely available. We also provide Solar-Open-100B and all Upstage solutions free of charge to schools and non-profit organizations. Having achieved deep research capabilities with our 100B model, we will not stop here. We aim to reach 200B, 300B, and multimodal models. Together with our consortium partners, we will ensure that Korean AI stands tall on the global stage.”

SK Telecom bets on ultra-large 500B model

SK Telecom’s elite team, which includes Krafton, 42dot, Rebellions, LINER, Select Star, Seoul National University’s Industry-University Cooperation Foundation, and KAIST, is pursuing the goal of “developing next-generation transformer-based ultra-large models integrating language and multimodal actions, and implementing K-AI services.” At 500B parameters, its model is the largest among the five consortia. Jung Seok-geun, Head of AI CIC, opened his presentation by saying, “Only China, France, Japan, and Korea have achieved 500B-level models so far, and only the United States has reached the 1,000B level. A.X K1 will be a powerful stepping stone for Korea to stand shoulder to shoulder with leading global AI nations.”

SK Telecom is leveraging the 500B A.X K1 model—the largest in the project—as its volume-driven strategy / Source=ITDongA

“Bigger models are not automatically better,” Jung said. “However, in the AI world, model size translates directly into performance and potential. The reason the U.S. is building trillion-parameter models is to understand the context of human language more completely.” A.X K1 has 500 billion parameters and runs in large-scale data centers, but as an MoE model it activates only 33B of them at inference time. Thanks to its scale, baseline performance is high, while smaller, domain-specific AI models handle each user need, improving power efficiency.
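A common back-of-the-envelope rule is that a transformer’s forward pass costs roughly 2 FLOPs per active parameter per token, ignoring attention over the context. Applying that rule of thumb to the figures Jung cited, as an approximation rather than an SK Telecom number, shows why activating 33B of 500B parameters matters for efficiency.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rule-of-thumb forward-pass cost: ~2 FLOPs per active parameter per token."""
    return 2.0 * active_params


TOTAL_PARAMS = 500e9   # A.X K1 total parameters, as reported
ACTIVE_PARAMS = 33e9   # parameters active at inference, as reported

dense = forward_flops_per_token(TOTAL_PARAMS)   # hypothetical: every parameter used per token
sparse = forward_flops_per_token(ACTIVE_PARAMS)

print(f"dense: {dense:.2e} FLOPs per token")
print(f"MoE:   {sparse:.2e} FLOPs per token")
print(f"ratio: {sparse / dense:.1%}")
```

The estimate covers compute only: all 500 billion parameters still have to be held in memory, which is one reason a model of this size runs in large data centers.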

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.
