Amazon Joins Google in Heated AI Chip Race

Dong-A Ilbo

Updated 2025.12.04

Big Tech Challenges Nvidia's Dominance

Amazon Unveils Chips with "Higher Performance, Lower Power"

Google Boosts TPU's 'Performance Efficiency'

Custom Chips Developed for Model Structures… Nvidia, Holding 90% Market Share, Says "Still a Long Way to Go"

● Google, Amazon, OpenAI Venture into Custom Chip Development

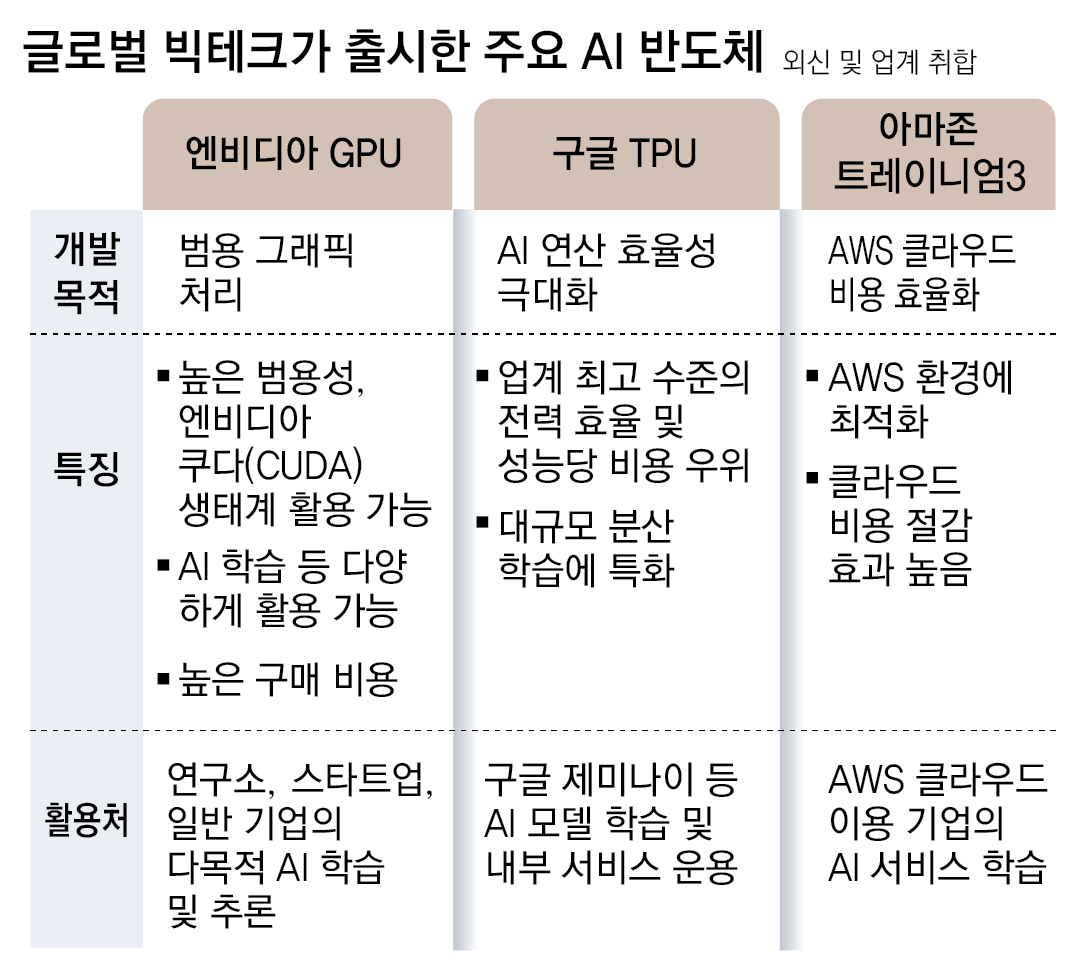

Amazon Web Services (AWS) announced the official launch of its custom AI chip, 'Trainium3', on December 2 (local time) at its annual 'AWS re:Invent 2025' event in Las Vegas, USA. AWS said that an UltraServer equipped with up to 144 Trainium3 chips was available as of that day.

According to AWS, Trainium3 delivers four times the computational performance of the previous-generation chip while consuming 40% less power. AWS stated that using Trainium3 can cut the cost of training and running AI models by up to 50% compared with systems using equivalent graphics processing units (GPUs). Matt Garman, CEO of AWS, emphasized in his keynote speech that "Trainium3 offers the industry's best cost efficiency in AI training and inference."

Google's in-house Tensor Processing Unit (TPU) also counts low power consumption and reduced operating costs among its strengths. The TPU, which recently drew acclaim for powering Google's AI model 'Gemini 3', was developed by Google in collaboration with Broadcom, a U.S. fabless semiconductor company. AI startup Anthropic plans to use up to one million TPUs to develop AI models, and Meta is reportedly introducing Google's TPUs into its data centers. OpenAI has also decided to jointly develop custom AI chips with Broadcom for training and running its AI models, including ChatGPT.

● Replacing Scarce GPUs

As AI investment expands globally, NVIDIA, which first introduced GPUs to the market, has emerged as the dominant force in AI chips. NVIDIA holds a 90% share of the GPU-based AI chip market. Moreover, each GPU is expensive, priced between USD 30,000 and 40,000 (approximately KRW 44 million to 59 million). With power costs factored in as well, big tech companies have concluded that introducing dedicated chips optimized for specific computations is advantageous in the long run.

The differing service characteristics of each company have also driven the development of custom AI chips. For instance, AWS needs AI chips for cloud services, while Google requires them for training large language models (LLMs) like Gemini. Although general-purpose GPUs can handle most computations, a dedicated chip designed around a specific company's model structure can perform the same computations with lower power consumption.

However, many in the industry believe that NVIDIA's monopolistic position in AI chips will not be shaken anytime soon. The current global AI research and development (R&D) environment is built around NVIDIA GPUs and NVIDIA's software platform, CUDA. Given the scale of the infrastructure already in place and the cost of switching, the likelihood of a move to other AI chips in the near term is low. NVIDIA recently acknowledged that "Google has made significant progress in the AI field," but expressed confidence that "(our products) are a generation ahead of the industry."

Lee Min-a; Park Jong-min

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.
