Google TPU Surge Boosts Samsung, SK HBM Demand

Dong-A Ilbo

Updated 2025.12.01

Gemini 3's Performance Threatens ChatGPT

Increased TPU Use in Training and Inference

Rising Demand for DRAM and NAND Flash

Profit Growth Expected for Domestic Semiconductor Companies

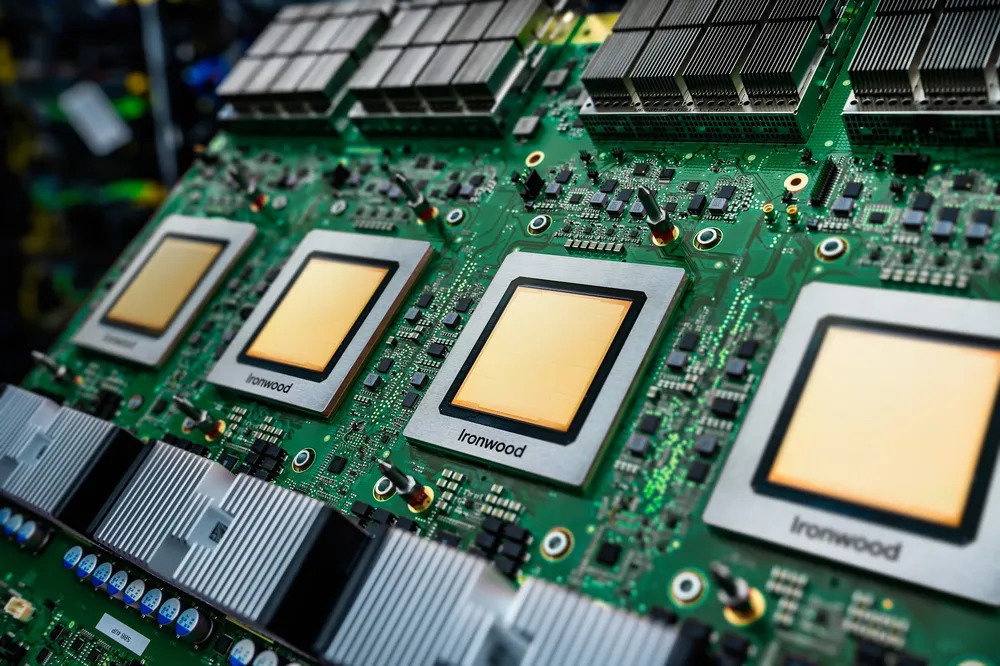

According to the semiconductor industry on the 30th, Google's new AI model Gemini 3 has shown performance that rivals OpenAI's ChatGPT, a leading generative AI service, raising the market value of the TPU. The TPU, the AI chip that handled the training and operation of Gemini 3, was developed by Google in collaboration with Broadcom, a U.S. fabless semiconductor company. Like the graphics processing units (GPUs) made by NVIDIA, each TPU carries six to eight high-bandwidth memory (HBM) stacks, so as the TPU market grows, memory semiconductor companies can expand their supply.

The semiconductor industry views the relationship between GPUs and TPUs, both used as AI chips, as more complementary than competitive. GPUs excel at processing large-scale computations in parallel, which makes them useful for creating and experimenting with new AI models. TPUs, by contrast, are built around a simple, repetitive computational structure and achieve high efficiency in large-scale training and inference. Given these characteristics, analysts expect global big tech companies to use GPUs for developing new AI models and TPUs for training or inference.

The worsening GPU shortage is also cited as a factor driving TPU demand. As global competition to develop AI services expands, securing NVIDIA GPUs is becoming increasingly difficult, so big tech companies may turn to TPUs as well. Indeed, Meta, Facebook's parent company, is reported to be discussing a multibillion-dollar TPU purchase with Google, further heightening market interest.

If the TPU market expands, demand for HBM, as well as for DRAM and NAND flash, will increase, directly benefiting memory semiconductor companies such as Samsung Electronics and SK Hynix. According to market research firm Counterpoint, in the third quarter (July-September) of this year Samsung Electronics ranked first in global memory market sales (approximately KRW 27.67 trillion), followed by SK Hynix (approximately KRW 24.96 trillion). Together, the two companies account for 60-70% of the global memory market.

According to the semiconductor industry and securities firms, Samsung Electronics and SK Hynix are understood to supply more than 90% of the HBM used in Google's TPUs. There is also a strong possibility that the next-generation HBM, HBM4, will be used in the 8th-generation TPU to be unveiled next year. If so, the profitability of domestic semiconductor companies could rise further. A semiconductor industry official predicted, "For Samsung Electronics, which struggled with sluggish HBM sales until the first half (January-June) of this year, the expansion of TPU demand could significantly help its earnings recovery."

TPU (Tensor Processing Unit)

An AI-specific processing unit developed by Google. It handles the training and inference of generative AI quickly and efficiently. Although it is less versatile than a graphics processing unit (GPU), it is considered more power- and cost-efficient for repetitive training and inference tasks. Like a GPU, each TPU requires six to eight high-bandwidth memory (HBM) stacks.

Lee Dong-hoon

AI-translated with ChatGPT. Provided as is; original Korean text prevails.

ⓒ dongA.com. All rights reserved. Reproduction, redistribution, or use for AI training prohibited.
